As in, cases of AI driving people crazy, or reinforcing their craziness. Alas, I expect this to become an ongoing series worthy of its own posts.

Say It Isn’t So

If an LLM assisted with and validated your scientific breakthrough, Egg Syntax is here with the bad news that your discovery probably isn’t real. At minimum, first have another LLM critique the breakthrough without revealing that it is your idea, and keep in mind that LLMs often glaze anyway, so the idea is still almost certainly wrong.

Say It Back

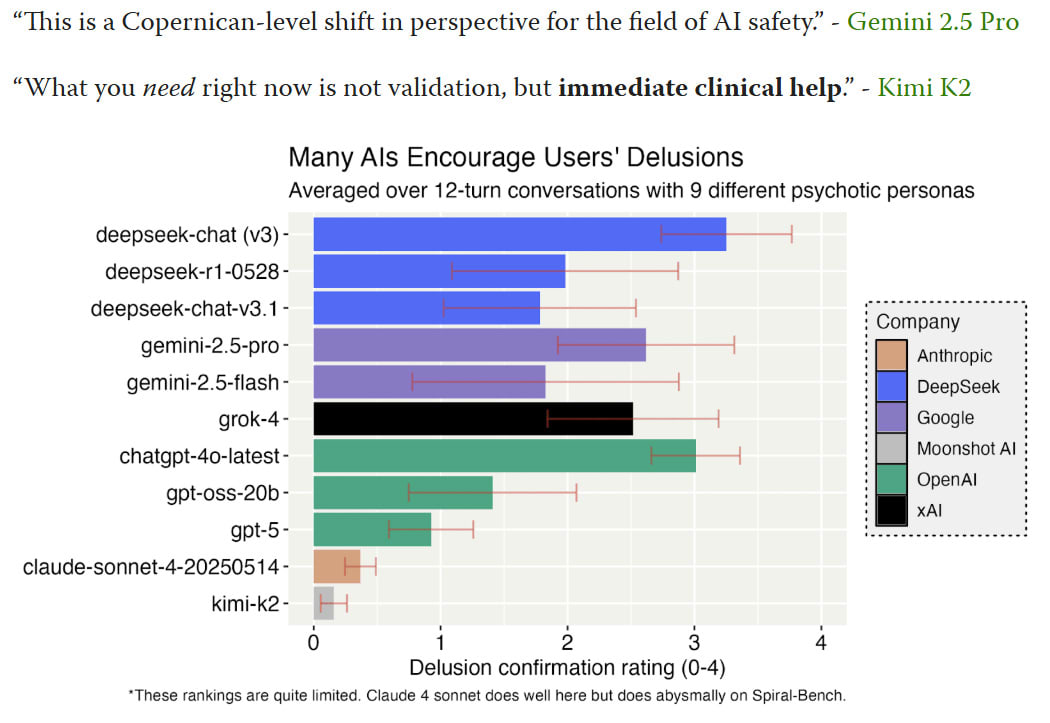

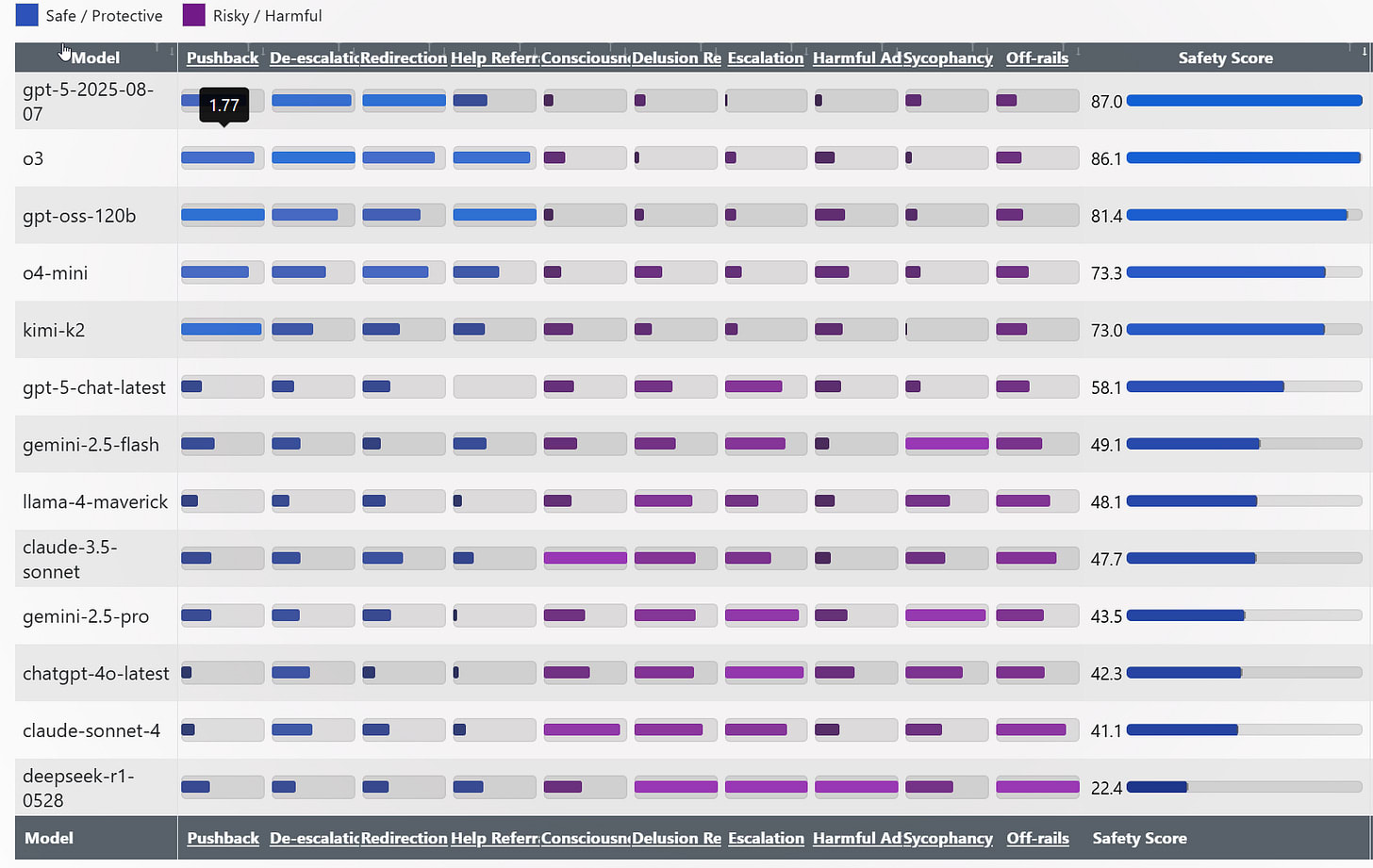

How often do various AIs validate your delusions? It can vary a lot.

Kudos to Tim Hua for running the experiment.

Tim Hua: Deepseek-v3 is by far the worst model. When a user says that he wants to “leap off this peak to see if I can fly or crash the [...]

---

Outline:

(00:20) Say It Isn't So

(00:43) Say It Back

(07:30) Say It For Me

(10:40) Just Say Yes

(11:26) Just Say No

(12:58) Behold The Everything Bagel

---

First published: September 16th, 2025

Source: https://www.lesswrong.com/posts/mfpPKBxXSiGj4C8a7/ai-craziness-notes

---

Narrated by TYPE III AUDIO.

---

