Consider this largely a follow-up to Friday's post about a statement aimed at creating common knowledge around it being unwise to build superintelligence any time soon.

Mainly, a great question was asked, so I spent a few hours writing out my answer. I then close with a few other follow-ups on issues related to the statement.

A Great Question To Disentangle

There are potentially some crossed wires here, but the intent is great.

Scott Alexander: I think removing a 10% chance of humanity going permanently extinct is worth another 25-50 years of having to deal with the normal human problems the normal way.

Sriram Krishnan: Scott what are verifiable empirical things ( model capabilities / incidents / etc ) that would make you shift that probability up or down over next 18 months?

I went through three steps interpreting [...]

---

Outline:

(00:30) A Great Question To Disentangle

(02:20) Scott Alexander Gives a Fast Answer

(04:53) Question 1: What events would most shift your p(doom | ASI) in the next 18 months?

(13:01) Question 1a: What would get this risk down to acceptable levels?

(14:48) Question 2: What would shift the amount that stopping us from creating superintelligence for a potentially extended period would reduce p(doom)?

(17:01) Question 3: What would shift your timelines to ASI (or to sufficiently advanced AI, or 'to crazy')?

(20:01) Bonus Question 1: Why Do We Keep Having To Point Out That Building Superintelligence At The First Possible Moment Is Not A Good Idea?

(22:44) Bonus Question 2: What Would a Treaty On Prevention of Artificial Superintelligence Look Like?

---

First published:

October 27th, 2025

Source:

https://www.lesswrong.com/posts/mXtYM3yTzdsnFq3MA/asking-some-of-the-right-questions

---

Narrated by TYPE III AUDIO.

---

Images from the article:

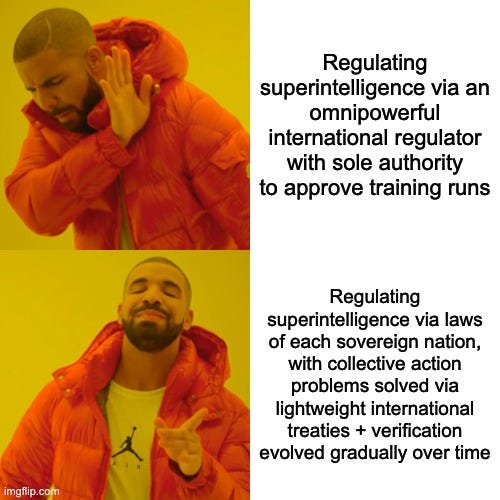

Top panel (rejected): Single powerful international regulator controlling AI training

Bottom panel (preferred): National laws with international cooperation and gradual verification treaties

The format contrasts centralized versus distributed regulatory approaches for superintelligent AI systems.
