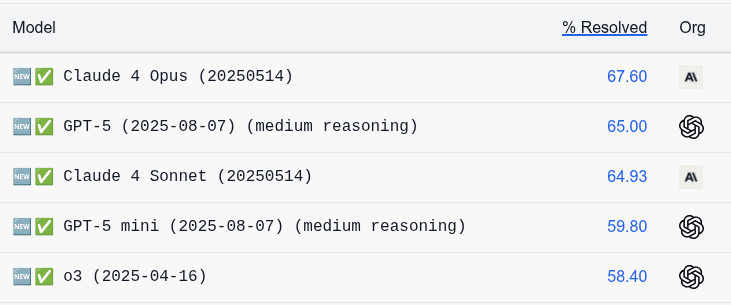

What if DeepSeek released a model claiming 66 on SWE and almost no one tried using it? Would it be any good? Would you be able to tell? Or would we get the shortest post of the year?

Why We Haven’t Seen v4 or r2

DeepSeek was encouraged by authorities to adopt Huawei's Ascend processor rather than use Nvidia's systems after releasing its R1 model in January, according to three people familiar with the matter.

But the Chinese start-up encountered persistent technical issues during its R2 training process using Ascend chips, prompting it to use Nvidia chips [...]

---

Outline:

(00:24) Why We Haven't Seen v4 or r2

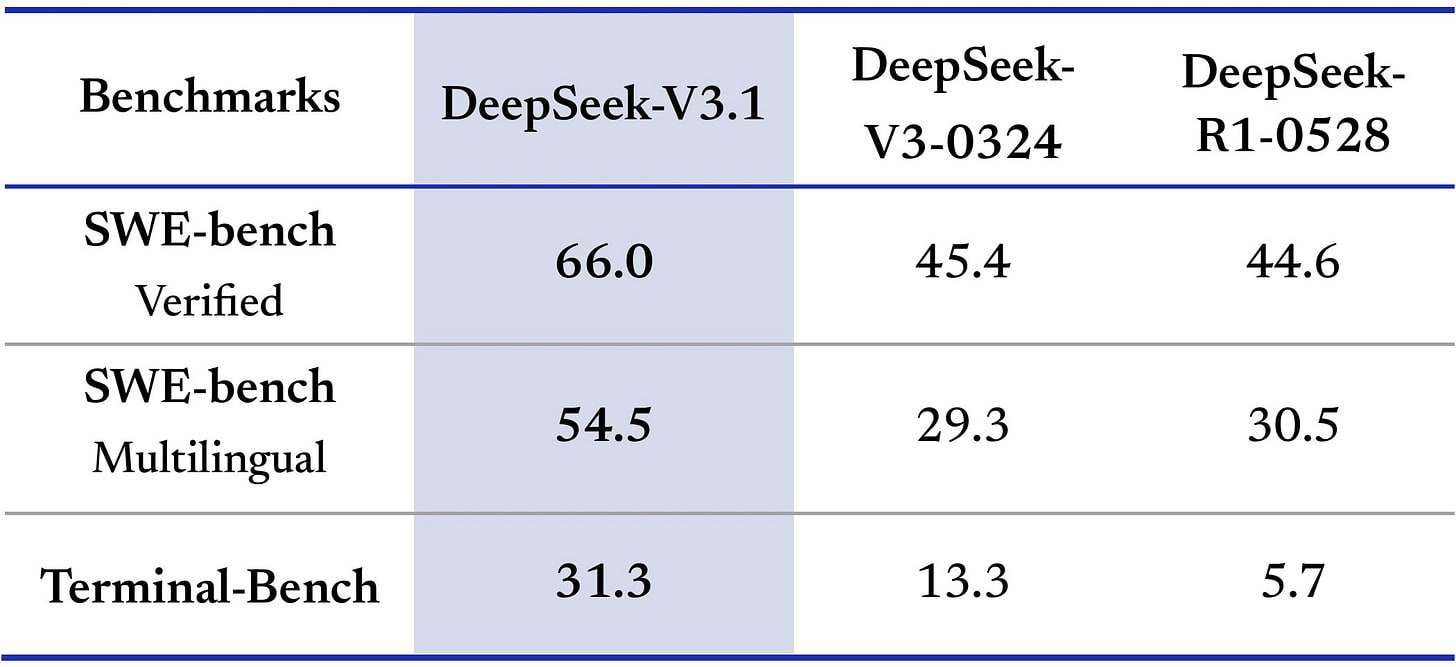

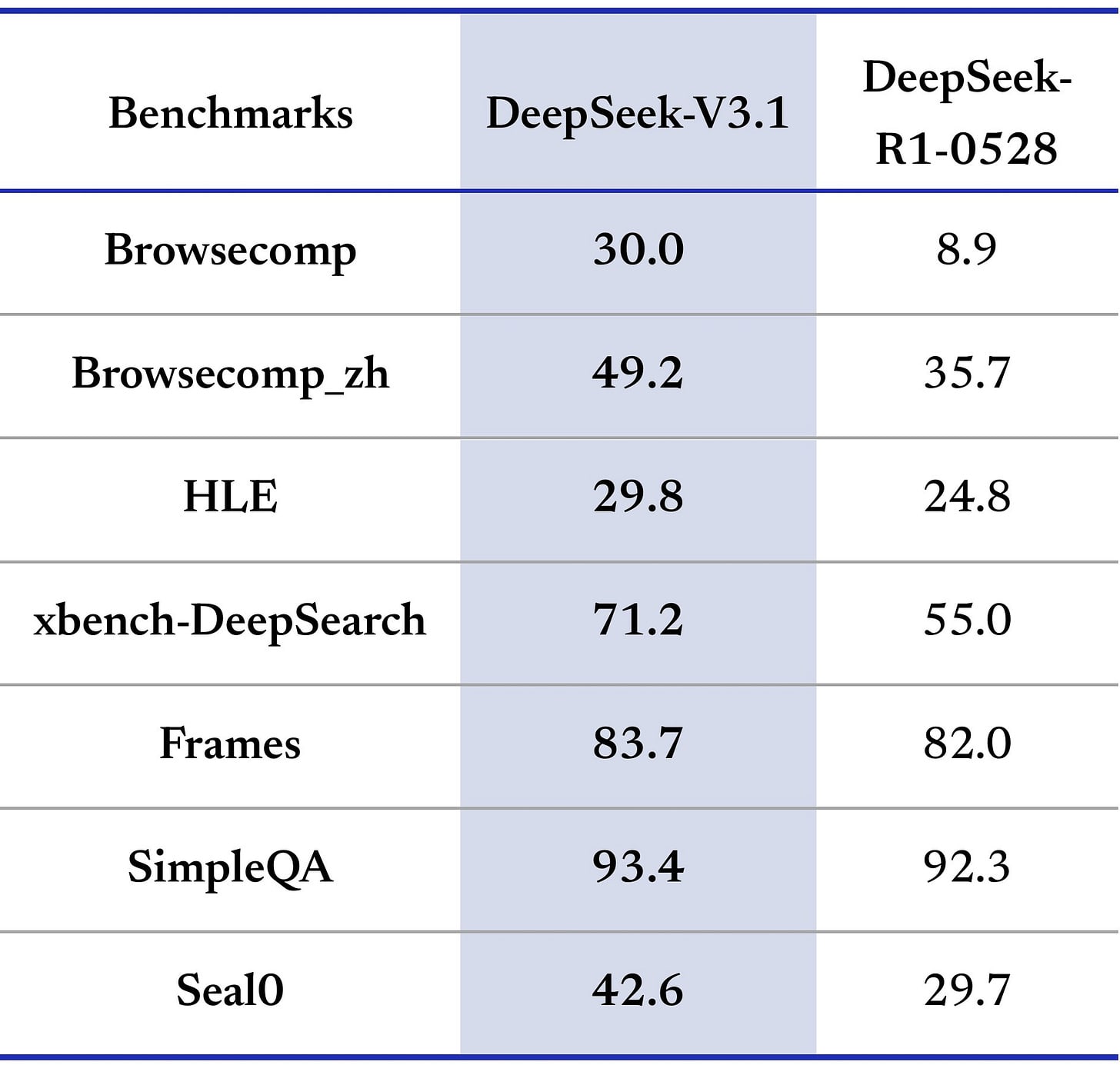

(01:49) Introducing DeepSeek v3.1

(04:34) Signs of Life

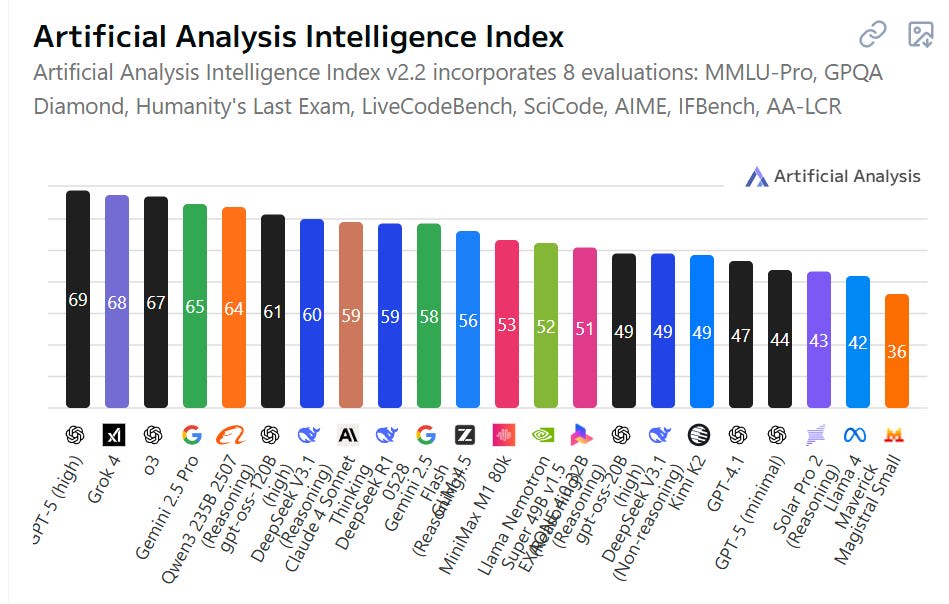

(06:57) How Should We Update?

---

First published: August 22nd, 2025

Source: https://www.lesswrong.com/posts/gBnfwLqxcF4zyBE2J/deepseek-v3-1-is-not-having-a-moment

---

Narrated by TYPE III AUDIO.
