Shows

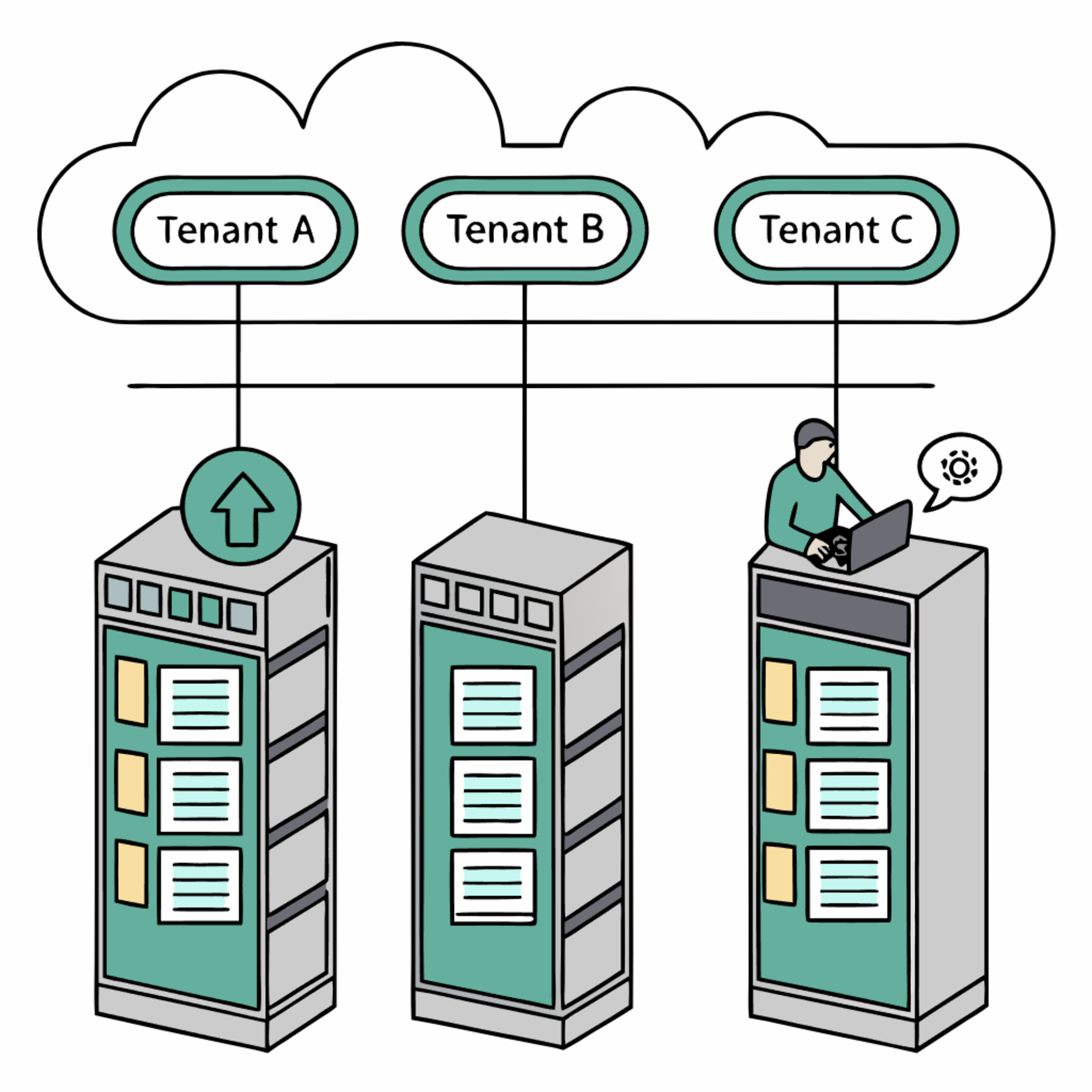

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

Zonda: Workday's AI Data Streaming Platform

Public source: https://medium.com/workday-engineering/accelerating-zonda-workdays-data-streaming-platform-22a2e10d9901

An analysis of Zonda, Workday's proprietary AI-centric data streaming platform built on Apache Flink. It highlights Zonda's role as the central nervous system for the data that powers Workday's artificial intelligence and machine learning capabilities. The report examines the strategic decision to build Zonda in-house, citing the inadequacy of generic cloud services for Workday's stringent demands for low latency, cost efficiency, and multi-tenant data security. It also details specific engineering challenges the Zonda team overcame, such as implementing cryptographic isolation for multi-tenancy and optimizing S3...

2025-06-17 · 17 min

ALE-Bench: AI in Algorithm Engineering Analysis

Sources: https://arxiv.org/abs/2506.09050, https://sakana.ai/ale-bench/

An analysis of ALE-Bench, a new evaluation framework designed to assess artificial intelligence (AI) performance in algorithm engineering, particularly on computationally hard optimization problems. It details the benchmark's design philosophy, which emphasizes long-horizon, objective-driven tasks that mirror real-world industrial challenges in logistics, scheduling, and power-grid balancing. The analysis compares AI systems against human experts, highlighting the significant performance gains achieved through iterative refinement and agentic scaffolding, while also identifying current limitations of large language models (LLMs), such as inconsistent logical reasoning and difficulty with long-horizon planning.

2025-06-17 · 32 min

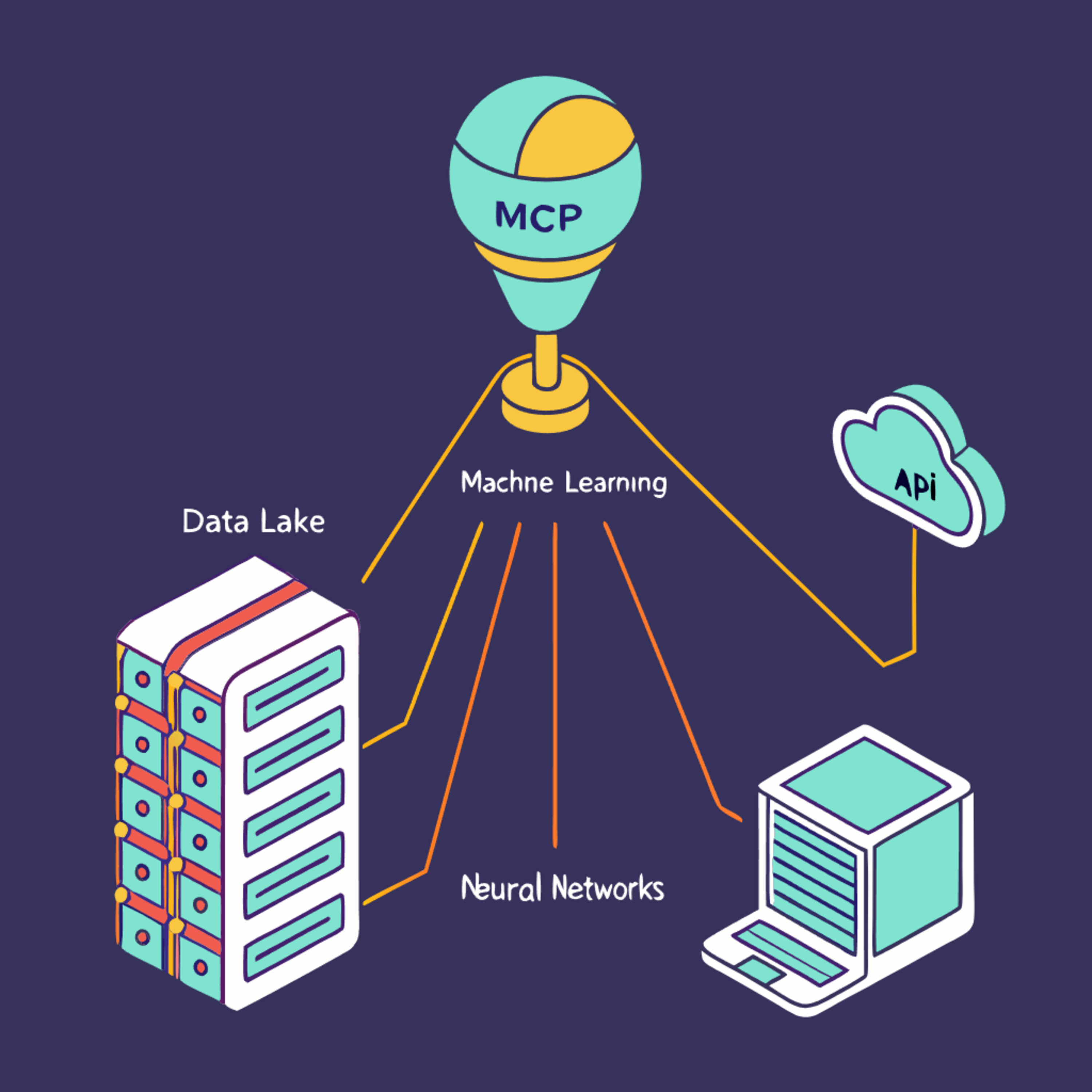

Architecting APIs for AI Agents

A comprehensive guide to designing APIs for AI agents, emphasizing a paradigm shift from human-centric data exchange to machine-interpretable capabilities. It outlines foundational principles such as predictability, semantic richness, and robust error handling, which are crucial for an agent's autonomous interaction. The source further explores architectural blueprints, comparing communication protocols such as REST, GraphQL, and gRPC, and discusses the importance of API gateways and orchestration patterns. It also introduces emerging protocols such as the Model Context Protocol (MCP) for agent-to-tool interaction and Agent-to-Agent (A2A) for inter-agent collaboration, highlighting their complementary roles in a future multi-agent ecosystem. The text...

2025-06-16 · 35 min

Robust AI Fairness in Hiring through Internal Intervention

Source: https://arxiv.org/abs/2506.10922

Examines the limitations of current methods for ensuring fairness in large language models (LLMs), particularly in high-stakes applications like hiring. It argues that prompt-based anti-bias instructions are insufficient, creating a "fairness façade" that collapses under realistic conditions. The source also reveals that LLM-generated reasoning (chain-of-thought) can be unfaithful, masking underlying biases despite explicit claims of neutrality. In response, the research proposes and validates an internal, interpretability-guided approach called Affine Concept Editing (ACE), which directly modifies a model's internal representations of sensitive attributes to achieve robust and generalizable bias m...

2025-06-15 · 20 min

Python Wheels for Machine Learning

A comprehensive guide to Python wheels and their role in modern machine learning (ML) workflows. It explains that wheels are pre-built, ready-to-install package files that offer significant advantages over source distributions, including faster installation, improved reliability, and enhanced security, which matter especially for ML libraries with compiled code. The sources detail the anatomy of a wheel, from its compatibility-defining filename to its internal structure and metadata, and highlight the importance of pyproject.toml for declarative project configuration. The text also covers the wheel creation workflow, the challenges of cross-platform compatibility (especially for Linux...

2025-06-15 · 31 min
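The compatibility-defining filename follows the pattern `{distribution}-{version}(-{build tag})-{python tag}-{abi tag}-{platform tag}.whl` from the wheel specification (PEP 427). The sketch below is not from the episode; it simply splits a filename into those fields with the standard library. It assumes the name is already normalized (hyphens inside the project name escaped to underscores) and does no tag validation; in practice the `packaging` library's `packaging.utils.parse_wheel_filename` is the more robust choice.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    """Fields encoded in a wheel filename (PEP 427 layout)."""
    distribution: str
    version: str
    build: Optional[str]  # optional build tag; None when absent
    python_tag: str       # e.g. "cp312" or "py3"
    abi_tag: str          # e.g. "cp312" or "none"
    platform_tag: str     # e.g. "any"; may hold several tags joined by "."


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel filename into its compatibility fields (no tag validation)."""
    suffix = ".whl"
    if not filename.endswith(suffix):
        raise ValueError(f"not a wheel filename: {filename!r}")
    parts = filename[: -len(suffix)].split("-")
    if len(parts) == 5:
        dist, version, py_tag, abi_tag, plat_tag = parts
        build = None
    elif len(parts) == 6:
        dist, version, build, py_tag, abi_tag, plat_tag = parts
    else:
        raise ValueError(f"expected 5 or 6 dash-separated fields: {filename!r}")
    return WheelName(dist, version, build, py_tag, abi_tag, plat_tag)


if __name__ == "__main__":
    # Illustrative filenames: a pure-Python wheel and a compiled CPython 3.12 Linux wheel.
    for name in (
        "requests-2.32.3-py3-none-any.whl",
        "numpy-2.0.0-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",
    ):
        print(parse_wheel_filename(name))
```

A `none` ABI tag with an `any` platform tag marks a pure-Python wheel that installs everywhere; a compiled ML library instead carries interpreter-, ABI-, and platform-specific tags, which is why such projects usually publish one wheel per target platform.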

Pydantic for Robust Machine Learning Systems

A comprehensive overview of Pydantic, a Python library for data validation, configuration management, and reproducibility in machine learning workflows. It highlights Pydantic's foundational role in ensuring data integrity through type hints and granular constraints, addressing the "garbage in, garbage out" problem. The source then explains Pydantic's practical applications across the ML lifecycle, from data ingestion and preprocessing with custom validators to managing complex experiment configurations and secrets with pydantic-settings. Finally, it emphasizes Pydantic's integration with FastAPI for deploying robust ML APIs and its emerging significance in generative AI for structuring non-deterministic LLM...

2025-06-15 · 19 min

The Rise of the GenAI Application Engineer: Architecting the Next Wave of Software Innovation

Source: https://www.deeplearning.ai/the-batch/issue-305/

This comprehensive, and maybe a little too long, overview details the emerging role of the GenAI Application Engineer, highlighting their position in translating generative AI's potential into practical software solutions. The text outlines the multifaceted skills the role requires: technical expertise in AI frameworks, programming languages, and generative models; soft skills such as problem-solving and communication; and a distinctive "X-factor" of product and design intuition. It further explores how these engineers use AI tools for rapid, efficient application development, examines key...

2025-06-13 · 1h 31

Yambda: A Landmark Dataset for Recommender Systems

A comprehensive analysis of Yandex's Yambda dataset, highlighting its significance as the world's largest publicly available dataset for recommender systems research. It details Yambda's unprecedented scale, with billions of user-track interactions, and its rich features, including timestamps, audio embeddings, and an 'is_organic' flag indicating how content was discovered. The sources emphasize Yambda's role in bridging the gap between academic research and industry applications by providing real-world data and promoting robust evaluation through its Global Temporal Split (GTS) methodology. They also discuss the ethical considerations of handling large-scale anonymized user data, such...

2025-06-12 · 22 min
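The idea behind a global temporal split is that one timestamp cutoff applies to every user at once, so all training interactions precede all test interactions; per-user leave-one-out splits do not guarantee this and can let "future" behavior leak into training. The sketch below illustrates only that idea and is not Yambda's exact protocol; the `Interaction` schema and the `cutoff_ts` parameter are hypothetical.

```python
from typing import List, NamedTuple, Tuple


class Interaction(NamedTuple):
    # Hypothetical minimal schema; the real dataset carries more fields.
    user_id: int
    item_id: int
    timestamp: int


def global_temporal_split(
    interactions: List[Interaction], cutoff_ts: int
) -> Tuple[List[Interaction], List[Interaction]]:
    """One global cutoff: train gets timestamp < cutoff_ts, test gets the rest."""
    ordered = sorted(interactions, key=lambda it: it.timestamp)
    train = [it for it in ordered if it.timestamp < cutoff_ts]
    test = [it for it in ordered if it.timestamp >= cutoff_ts]
    return train, test


if __name__ == "__main__":
    log = [
        Interaction(1, 10, 100),
        Interaction(2, 11, 150),
        Interaction(1, 12, 200),
        Interaction(2, 13, 250),
        Interaction(3, 14, 300),
    ]
    train, test = global_temporal_split(log, cutoff_ts=200)
    print(len(train), len(test))  # prints "2 3"
```

User 3 in this toy log appears only in the test set, so a real evaluation also has to decide how to treat such cold-start users.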

V-JEPA 2: Advancing Physical AI and Robotics

Details Meta AI's V-JEPA 2, a world model designed to advance physical artificial intelligence and zero-shot robotic interaction. It explains how V-JEPA 2, trained extensively on video data, enables robots to plan and operate in unfamiliar environments with novel objects, achieving notable success rates in pick-and-place tasks. The document also addresses critical challenges in deploying such AI, including closing the performance gap to human dexterity, overcoming technical hurdles like multimodal integration, and navigating significant ethical and societal considerations such as algorithmic bias, safety, and workforce transformation, while discussing the evolving regulatory landscape and future research...

2025-06-12 · 29 min

Direct Preference Optimization (DPO) for LLMs

A comprehensive overview of Direct Preference Optimization (DPO), a streamlined method for aligning large language models (LLMs) with human values and subjective preferences. It explains DPO's core principles and the source of its efficiency: it optimizes the LLM directly on binary human choices between pairs of responses, bypassing the separate reward model training and reinforcement learning steps of traditional Reinforcement Learning from Human Feedback (RLHF). The document emphasizes DPO's utility for subjective tasks like creative writing, personalized communication, and style control, and discusses its methodology, including the loss function and the role of the reference model. ...

2025-06-12 · 22 min
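For a prompt x with preferred response y_w and rejected response y_l, the DPO loss is -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]), where π_ref is the frozen reference model and β controls how far the policy may drift from it. Below is a minimal scalar sketch, assuming the four sequence log-probabilities are already computed (real training uses batched tensors summed over response tokens); the default β = 0.1 is only an illustrative value.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one preference pair, from sequence log-probabilities."""
    # Log-ratios policy/reference; beta times these are DPO's implicit rewards.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) == softplus(-margin)
    return softplus(-margin)


if __name__ == "__main__":
    # Policy identical to the reference: loss equals log(2).
    print(dpo_loss(-5.0, -5.0, -5.0, -5.0))
    # Policy shifted probability toward the chosen response: loss drops below log(2).
    print(dpo_loss(-4.0, -6.0, -5.0, -5.0))
```

Because the reference log-probabilities enter only as fixed offsets, no separate reward model is trained; the policy's own likelihood ratios play that role.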

EchoLeak: The Zero-Click AI Vulnerability

Sources: https://www.aim.security/lp/aim-labs-echoleak-blogpost, https://msrc.microsoft.com/update-guide/vulnerability/CVE-2025-32711

The provided sources analyze the "EchoLeak" vulnerability (CVE-2025-32711), a critical zero-click AI command injection flaw discovered in Microsoft 365 Copilot. The vulnerability allowed unauthorized data exfiltration without user interaction by manipulating how the AI processes specially crafted emails, through an "LLM Scope Violation" within its Retrieval-Augmented Generation (RAG) architecture. The texts detail the attack chain, the potential impact on sensitive organizational data, and mitigation strategies, emphasizing the need for proactive, AI-specific security measures beyond traditional cybersecurity, including AI...

2025-06-12 · 24 min

AI and Personal Data Under GDPR

Source: https://www.europarl.europa.eu/RegData/etudes/STUD/2020/641530/EPRS_STU(2020)641530_EN.pdf

Excerpts from an EPRS study on the impact of the General Data Protection Regulation (GDPR) on artificial intelligence, offering a comprehensive analysis of the intersection between AI and data protection. It explores how AI's rapid development and appetite for data make the application of GDPR principles such as purpose limitation, data minimization, and transparency necessary. The study examines the challenges and opportunities presented by AI's use of personal data, particularly in profiling and automated decision-making, while also discussing the rights of d...

2025-06-11 · 20 min

Hugging Face TGI: LLM Deployment and Optimization

The sources offer a comprehensive technical overview of Hugging Face Text Generation Inference (TGI), a toolkit for efficiently deploying and serving large language models (LLMs). They define TGI's purpose in addressing the high computational demands and latency requirements of LLMs, and trace its evolution from a focus on NVIDIA GPUs to broad hardware compatibility. The texts explain TGI's core architecture, comprising a Router, Launcher, and Model Server, and how data flows through these components. They also highlight key features such as continuous batching, advanced quantization techniques (like EETQ and FP8), speculative decoding, and guidance mechanisms, all...

2025-06-11 · 33 min

Sparse Attention Mechanisms Overview

The sources collectively explore sparse attention mechanisms in deep learning, primarily within Transformer models. They explain how standard attention's quadratic computational and memory cost (O(n²) in sequence length n) limits the handling of long sequences, and how sparse attention addresses this by computing only a subset of query-key interactions. Various sparse patterns, such as local window, global, random, and hybrid, are discussed, along with specific models like Longformer, Reformer, and BigBird that implement these techniques. The texts highlight the significant efficiency gains, which enable longer context windows for tasks in NLP, computer vision, speech recognition, and other domains, w...

2025-06-10 · 37 min
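To make the saving concrete: a local window of w positions on each side plus g global tokens yields at most n·(2w+1) + 2·g·n computed query-key pairs instead of n². The sketch below builds a Longformer-style mask (local window plus global tokens; the random connections BigBird adds are omitted) as a boolean matrix and counts the pairs. It is illustrative only: the function and parameter names are hypothetical, and real kernels never materialize the dense n × n mask.

```python
from typing import Iterable, List


def sparse_attention_mask(
    n: int, window: int, global_tokens: Iterable[int] = ()
) -> List[List[bool]]:
    """mask[i][j] is True when query i attends to key j."""
    glob = set(global_tokens)
    mask = []
    for i in range(n):
        row = []
        for j in range(n):
            local = abs(i - j) <= window        # sliding-window band
            is_global = i in glob or j in glob  # global tokens see, and are seen by, all
            row.append(local or is_global)
        mask.append(row)
    return mask


def computed_pairs(mask: List[List[bool]]) -> int:
    """Number of query-key scores that would actually be computed."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    n = 8
    dense = n * n
    local_only = computed_pairs(sparse_attention_mask(n, window=1))
    with_global = computed_pairs(sparse_attention_mask(n, window=1, global_tokens=[0]))
    print(dense, local_only, with_global)  # prints "64 22 34"
```

For fixed w and g the count grows linearly in n rather than quadratically, which is where the efficiency gain comes from.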

An Analysis of Xiaohongshu's dots.llm1 MoE Model

Covers Xiaohongshu's dots.llm1, a new open-source large language model that uses a Mixture of Experts (MoE) architecture with 142 billion total parameters, of which 14 billion are active during inference. A key feature is its extensive pretraining on 11.2 trillion high-quality, non-synthetic tokens, alongside a 32K-token context window. Released under the permissive MIT license, the model includes intermediate training checkpoints to support research. The text discusses the advantages and challenges of the MoE architecture compared with dense models and notes dots.llm1's strong performance, particularly on Chinese-language tasks, positioning it competitively within the evolving global landscape...

2025-06-10 · 18 min
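The gap between total and active parameters comes from sparse routing: for each token a router scores every expert, but only the top-k experts actually run, so only their weights count as active (14B of 142B for dots.llm1, about 10%). The sketch below shows generic top-k gating with toy scalar "experts"; it is not dots.llm1's actual router, and the expert count, k, and the choice to renormalize the softmax over only the selected experts are assumptions (models differ on that last point).

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def top_k_moe(
    x: float, router_logits: Sequence[float], experts: Sequence[Expert], k: int = 2
) -> Tuple[float, List[int]]:
    """Route x to the k highest-scoring experts and mix their outputs."""
    if not 1 <= k <= len(experts) or len(router_logits) != len(experts):
        raise ValueError("need 1 <= k <= num_experts and one logit per expert")
    # Indices of the k largest router logits (ties keep their original order).
    chosen = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, shifted by the max for stability.
    top = max(router_logits[i] for i in chosen)
    weights = [math.exp(router_logits[i] - top) for i in chosen]
    total = sum(weights)
    # Only the chosen experts are evaluated; the others stay idle for this token.
    output = sum((w / total) * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    calls: List[int] = []

    def make_expert(idx: int, scale: float) -> Expert:
        def expert(v: float) -> float:
            calls.append(idx)  # record which experts actually run
            return scale * v
        return expert

    experts = [make_expert(i, float(i + 1)) for i in range(4)]
    out, chosen = top_k_moe(2.0, [0.0, 1.0, 5.0, 5.0], experts, k=2)
    print(out, chosen, calls)  # prints "7.0 [2, 3] [2, 3]"
```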

Pwn2Own Berlin and the Rise of AI Cybersecurity

The Pwn2Own Berlin 2025 hacking competition highlighted the evolving cybersecurity landscape by introducing a category specifically targeting artificial intelligence (AI) infrastructure, demonstrating that AI systems are becoming significant attack surfaces. While participants also discovered numerous zero-day vulnerabilities in traditional enterprise software, such as virtualization platforms and web browsers, the focus on AI underscored its growing integration into critical systems and its dual role in offensive and defensive cyber operations. The event revealed vulnerabilities in platforms like NVIDIA Triton and Chroma, prompting discussion of new vulnerability classes unique to AI and the ethical challenges of...

2025-06-10 · 28 min

Evolution of Large Language Models (2017-Present)

The sources track the evolution of large language models (LLMs) from 2017, when the Transformer architecture revolutionized the field by enabling models to process language more effectively. Key milestones include BERT, known for its bidirectional understanding, and the GPT series, particularly GPT-3, which demonstrated groundbreaking few-shot learning capabilities driven by massive scale. The development landscape is characterized by advances like Mixture of Experts (MoE) for efficiency and Reinforcement Learning from Human Feedback (RLHF) for alignment, alongside the rise of multimodality and powerful open-source models from major companies. Despite rapid progress, challenges remain, including high computational costs, model hallucinations...

2025-06-10 · 44 min

AI21 Labs: NLP Enterprise Solutions

An overview of AI21 Labs, an Israeli company specializing in natural language processing (NLP), focusing on its journey from a consumer product to an enterprise-focused AI provider. It highlights the company's key technologies, including the Jamba architecture, designed for efficiency and long-context understanding. The document examines AI21 Labs' product suite, such as the writing assistant Wordtune and the developer platform AI21 Studio, and explores their applications across diverse sectors. It also analyzes AI21 Labs' position in the competitive NLP landscape, details its numerous strategic partnerships, and discusses its approach to navigating the crucial...

2025-06-10 · 21 min

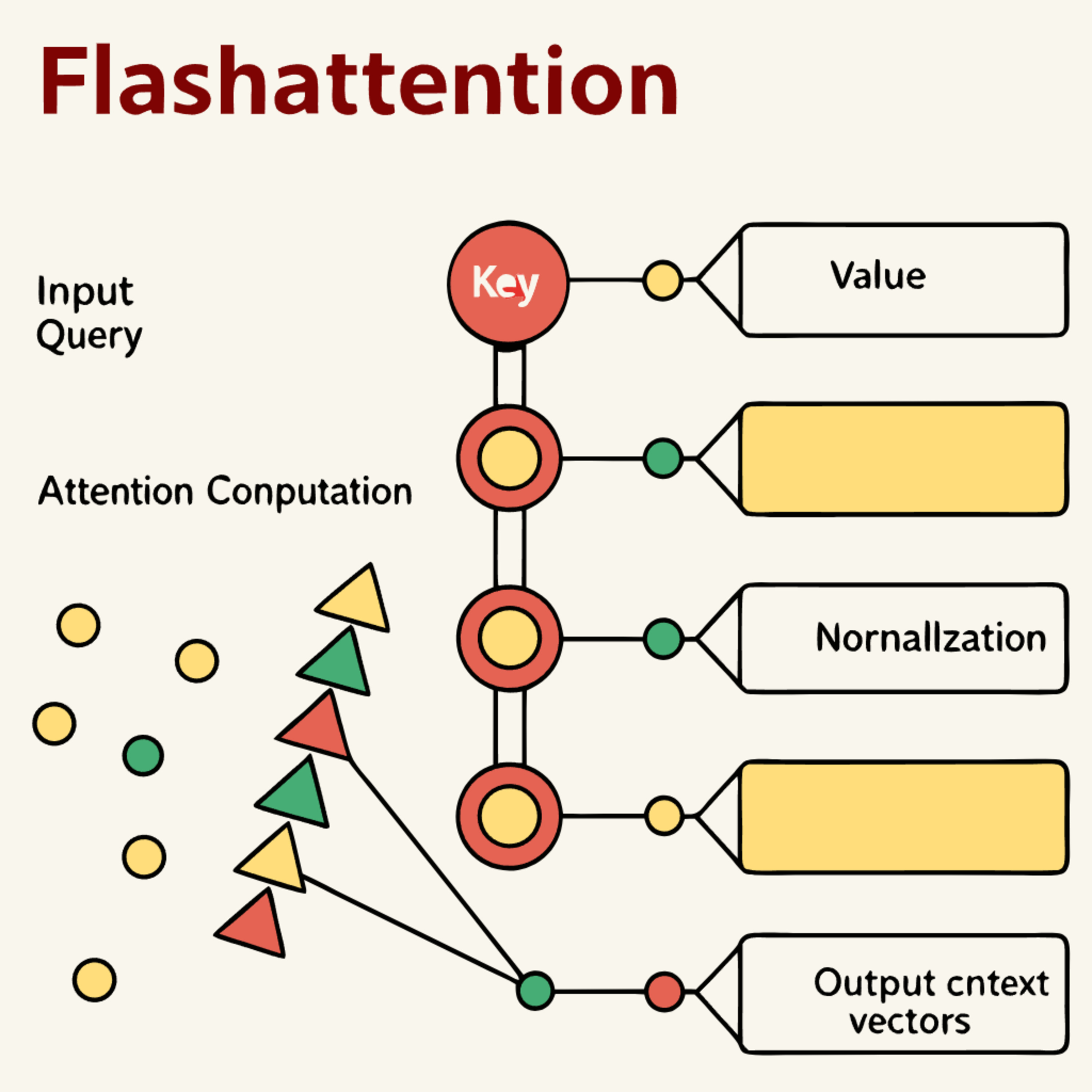

FlashAttention for Large Language Models

Discusses FlashAttention, an IO-aware algorithm that optimizes the attention mechanism in large language models (LLMs). It explains how standard attention suffers from quadratic complexity and becomes memory-bound on GPUs because of excessive data transfers between slow HBM and fast on-chip SRAM. FlashAttention addresses this with tiling, kernel fusion, online softmax, and recomputation, which significantly reduce memory usage (scaling linearly rather than quadratically with sequence length) and increase speed, enabling LLMs to handle much longer sequences. The text also covers the evolution through FlashAttention-2 and FlashAttention-3, which exploit greater parallelism and new hardware features, as well...

2025-06-09 · 22 min
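Online softmax is what lets FlashAttention process keys block by block without ever storing a full row of attention scores: it keeps a running maximum, a running normalizer, and a running weighted sum, and rescales the latter two whenever a new block raises the maximum. The sketch below shows only that arithmetic, for a single query with scalar values in plain Python; it models none of the GPU tiling, SRAM usage, or kernel fusion, and `block_size` merely stands in for a tile size.

```python
import math
from typing import Sequence


def softmax_weighted_sum(scores: Sequence[float], values: Sequence[float]) -> float:
    """Reference: materialize all softmax weights at once."""
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


def online_softmax_weighted_sum(
    scores: Sequence[float], values: Sequence[float], block_size: int = 2
) -> float:
    """Same result, computed one block of keys at a time."""
    if not scores or len(scores) != len(values) or block_size < 1:
        raise ValueError("need matching, non-empty scores/values and block_size >= 1")
    running_max = -math.inf
    normalizer = 0.0   # sum of exp(score - running_max) seen so far
    accumulator = 0.0  # sum of exp(score - running_max) * value seen so far
    for start in range(0, len(scores), block_size):
        s_blk = scores[start:start + block_size]
        v_blk = values[start:start + block_size]
        new_max = max(running_max, max(s_blk))
        # Rescale earlier partial sums to the new maximum (exp(-inf) == 0 on the first block).
        correction = math.exp(running_max - new_max)
        normalizer = normalizer * correction + sum(math.exp(s - new_max) for s in s_blk)
        accumulator = accumulator * correction + sum(
            math.exp(s - new_max) * v for s, v in zip(s_blk, v_blk)
        )
        running_max = new_max
    return accumulator / normalizer


if __name__ == "__main__":
    scores = [0.5, 2.0, -1.0, 3.0, 0.0]
    values = [1.0, 2.0, 3.0, 4.0, 5.0]
    print(softmax_weighted_sum(scores, values))
    for bs in (1, 2, 3, 5):
        print(bs, online_softmax_weighted_sum(scores, values, block_size=bs))
```

All printed values should agree up to floating-point rounding; in the real algorithm the same rescaling is applied to vector-valued output rows held in on-chip memory.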

AI Diplomacy: LLM Strategic Gameplay and Analysis

Introduces the EveryInc/AI_Diplomacy project, an open-source initiative on GitHub (source: https://github.com/EveryInc/AI_Diplomacy) that augments the strategy game Diplomacy with AI agents powered by large language models. The project aims to evaluate and benchmark various AI models by having them compete in the game, which involves complex negotiation, strategic decision-making, and even deception. The GitHub repository details the technical implementation, including the architecture of stateful agents, memory systems, prompt construction, and analysis tools, while the accompanying article highlights the insights gained from pitting top AI models against each other and emphasizes...

2025-06-09 · 42 min

NVIDIA CUDA: Driving the AI Revolution

Examines NVIDIA's Compute Unified Device Architecture (CUDA), highlighting its fundamental role in powering modern artificial intelligence advancements. It explains how CUDA leverages the parallel architecture of GPUs to significantly accelerate computationally intensive deep learning tasks compared to CPUs. The document also describes the expansive CUDA ecosystem, including essential libraries like cuDNN and TensorRT, demonstrates CUDA's performance superiority through comparisons and benchmarks, and discusses its expanding application areas, particularly in edge computing. Finally, it compares CUDA to alternative platforms like OpenCL, addressing challenges such as vendor lock-in and programming complexity, and outlines recent technological...

2025-06-09 · 37 min

AI's Internet Domination Analysis

Examines the profound transformation of the internet driven by Artificial Intelligence (AI). It explores the historical development and convergence of the internet and AI, detailing how AI is reshaping internet applications like search engines and social media, and the significant demands AI places on underlying infrastructure. The report also addresses the critical ethical, privacy, and security challenges posed by this integration, including bias, surveillance, and misinformation, while considering the future trajectory towards semantic web technologies and autonomous systems. Finally, it emphasizes the importance of regulation, digital literacy, and international collaboration for navigating this complex transition...

2025-06-09 · 36 min

Atlas: Advancing Long-Context NLP Through Enhanced Memory

Source: https://arxiv.org/abs/2505.23735

Examines Google Research's "Atlas" paper, which addresses the limitations of current language models in handling very long contexts. The paper introduces innovations like the Omega rule for contextual memory updates, higher-order kernels to boost memory capacity, and the Muon optimizer for enhanced memory management. It proposes DEEPTRANSFORMERS as a generalization of existing Transformer architectures by adding deep memory. Atlas shows promise in tasks requiring extensive recall and ultra-long context reasoning, outperforming some baselines and highlighting the importance of explicit, learnable memory systems for future NLP progress.

2025-06-05 · 21 min

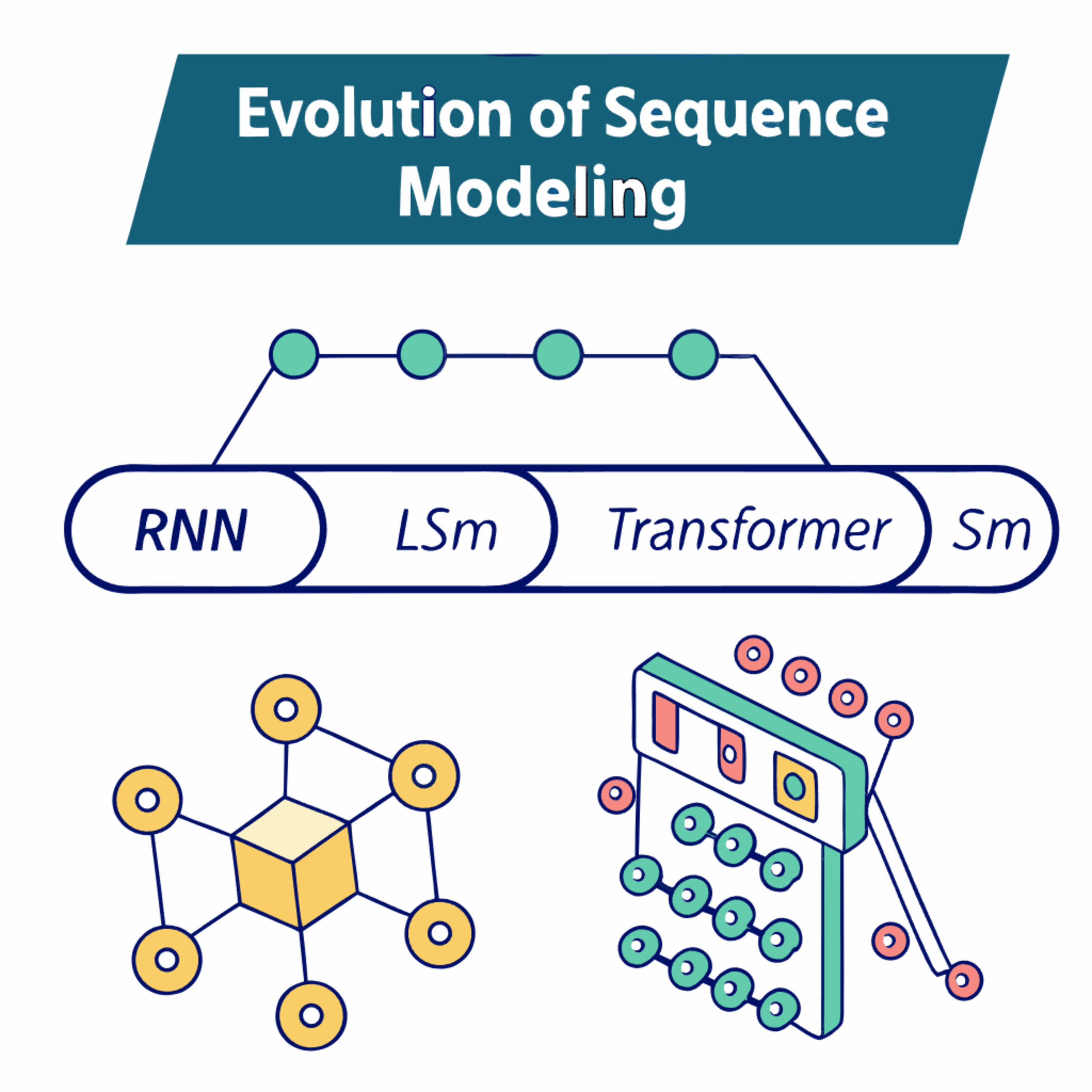

Evolution of Sequence Modeling: Transformers and Beyond

Examines the evolution of sequence modeling, focusing on the impact, advantages, and disadvantages of the Transformer architecture. It contrasts Transformers with earlier models like Recurrent Neural Networks (RNNs) and their variants (LSTMs, GRUs), highlighting the Transformer's key innovation of self-attention, which enables superior handling of long-range dependencies and parallel processing. Crucially, the report identifies the Transformer's quadratic complexity for long sequences as its main limitation, driving the development of more efficient alternatives like State Space Models (SSMs) such as Mamba and modern RNNs like RWKV and RetNet. It also explores hybrid architectures that combine elements...

2025-06-05 · 26 min

PlayDiffusion: Non-Autoregressive Diffusion for Speech Editing

Describes PlayDiffusion, an open-source non-autoregressive (NAR) diffusion model engineered for speech editing, specifically tasks like inpainting (filling gaps) and word replacement. Unlike traditional autoregressive (AR) models that regenerate entire sequences, PlayDiffusion employs a discrete diffusion process with iterative refinement of masked audio tokens and non-causal attention to efficiently make localized edits while preserving the surrounding context and speaker consistency. This approach aims for seamless, high-quality edits and can also function as a fast NAR Text-to-Speech (TTS) system. While promising for applications in audio production, accessibility, and interactive systems, challenges include computational cost, handling...

2025-06-05 · 28 min

Analyzing LLM Memorization and Generalization Quantitatively

Source: https://arxiv.org/abs/2505.24832

This research paper, "How much do language models memorize?" by Morris et al. (2025), introduces a novel method to estimate the extent of information a model retains about specific data points. The authors formally distinguish between "unintended memorization" (information about a specific dataset) and "generalization" (information about the true data-generation process). By focusing on unintended memorization, they estimate the capacity of language models, finding that models in the GPT family have an approximate capacity of 3.6 bits per parameter.

2025-06-05 · 56 min
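
To put the reported figure in perspective, here is a back-of-the-envelope conversion. The 1-billion-parameter model size is an arbitrary example chosen for illustration, not a result from the paper, and the estimate simply assumes the roughly 3.6 bits-per-parameter figure applies at that size:

$$
C \approx 3.6\ \tfrac{\text{bits}}{\text{parameter}} \times N,
\qquad
N = 10^{9} \;\Rightarrow\; C \approx 3.6 \times 10^{9}\ \text{bits} = 4.5 \times 10^{8}\ \text{bytes} \approx 450\ \text{MB}.
$$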

YOLO Object Detection Overview and Evolution

The sources explain the evolution of the YOLO (You Only Look Once) object detection framework, detailing its core concept of single-pass processing for speed and efficiency. They cover key architectural components like the backbone, neck, and head, the use of anchor boxes (in many versions), and the structure of its output tensor. The text also compares YOLO's speed and accuracy to other methods like SSD and Faster R-CNN, outlines common challenges in implementation (such as small object detection and dataset imbalance), and discusses practical applications across various fields and future trends in AI vision.

2025-05-29 · 52 min
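
As a worked example of the output tensor, the original YOLO (v1) design divides the image into an S × S grid in which each cell predicts B boxes (x, y, w, h, confidence) plus one set of C class probabilities. With the PASCAL VOC settings from that paper:

$$
S \times S \times (B \cdot 5 + C), \qquad S = 7,\ B = 2,\ C = 20 \;\Rightarrow\; 7 \times 7 \times 30.
$$

Anchor-based successors such as YOLOv2 instead predict class scores per anchor, giving S × S × B × (5 + C); with 5 anchors on a 13 × 13 grid for VOC that is 13 × 13 × 125. Exact layouts vary across later versions.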

Google AI Gemma Model Family Overview

Describes Google's Gemma model family, a series of open-weight artificial intelligence models designed for accessibility and innovation. Tracing their lineage back to the sophisticated Gemini program, the text outlines the evolution from initial text-based models to more advanced, efficient, and specialized variants like those for vision (PaliGemma), safety (ShieldGemma), medicine (MedGemma), and coding (CodeGemma). It highlights technological advancements like multimodality, expanded context windows, and efficiency innovations such as Quantization-Aware Training (QAT) and mobile-first architectures (Gemma 3n). The diverse applications and technical specifications underscore Google's strategic aim to cultivate a broad AI ecosystem and establish a...

2025-05-29 · 27 min

Analyzing Mistral AI's Codestral Embed Model

Introduces Mistral AI's Codestral Embed, a new embedding model specifically designed for code, aiming to address the limitations of general text embedders in understanding programming languages. A key feature is its use of Matryoshka Representation Learning, allowing for flexible embeddings of up to 3072 dimensions that can be efficiently truncated. The document highlights the model's ability to offer high performance with compact 256-dimensional int8 embeddings, claiming superiority over larger competitors and discussing the benefits of such efficiency for storage and speed. The source also explores the model's expected applications in Retrieval-Augmented Generation (RAG) for coding assistants and...

2025-05-29 · 26 min
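
Matryoshka Representation Learning trains a model so that a prefix of the full vector is itself a usable embedding, which is what makes truncation cheap. The sketch below is hypothetical and is not Mistral's API: it truncates a 3072-dimensional vector to its first 256 coordinates, re-normalizes it, and applies a simple symmetric int8 quantization (an assumed scheme; the model's actual quantization may differ). The storage comparison assumes a float32 baseline for the full vector.

```python
import math
import random
from typing import List, Sequence, Tuple


def truncate_and_normalize(embedding: Sequence[float], dims: int) -> List[float]:
    """Keep the first `dims` coordinates (Matryoshka-style prefix) and rescale to unit length."""
    prefix = list(embedding[:dims])
    norm = math.sqrt(sum(x * x for x in prefix))
    return [x / norm for x in prefix] if norm > 0 else prefix


def quantize_int8(vec: Sequence[float]) -> Tuple[List[int], float]:
    """Assumed symmetric per-vector scheme: q = round(x / scale), with scale = max|x| / 127."""
    max_abs = max(abs(x) for x in vec)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(x / scale))) for x in vec], scale


def storage_bytes(dims: int, bytes_per_value: int) -> int:
    """Raw storage for one vector, ignoring index overhead."""
    return dims * bytes_per_value


if __name__ == "__main__":
    rng = random.Random(42)
    full = [rng.gauss(0.0, 1.0) for _ in range(3072)]  # stand-in for a real embedding
    small = truncate_and_normalize(full, 256)
    q, scale = quantize_int8(small)
    print(len(q), scale)
    print(storage_bytes(3072, 4), "bytes (float32) vs", storage_bytes(256, 1), "bytes (int8)")
```

Under these assumptions the per-vector footprint drops from 12,288 bytes to 256 bytes, a 48× reduction. Truncating an embedding that was not trained with a Matryoshka objective would typically lose much more quality, since ordinary embeddings spread information across all dimensions.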

Microsoft NLWeb: The Conversational Agentic Web

Overview of Microsoft's NLWeb initiative, an open-source project aiming to integrate conversational AI directly into websites using existing data like Schema.org. NLWeb is positioned as a foundational technology for an "agentic web", enabling sites to become standardized endpoints accessible by AI agents via the Model Context Protocol (MCP). While promising enhanced user experience and accessibility, the initiative faces challenges regarding technical implementation, privacy, security, and ethical considerations. Early adoption shows potential for customer engagement and streamlined information access across various industries, though its long-term impact on web centralization versus decentralization is a key debate.

2025-05-25 · 44 min

Time Series Foundation Models (TSFM) Overview

The sources provide a comprehensive overview of Time Series Foundation Models (TSFMs), defining them as AI models pre-trained on vast time series data to learn generalized patterns for accurate forecasting and analysis on new data with minimal additional training. They explore TSFM architectures, highlighting the dominance of Transformer-based models often using patching techniques, while also presenting efficient MLP-based alternatives. The text discusses training methodologies, emphasizing the requirement for massive, diverse datasets and sophisticated pre-processing and tokenization, alongside the practical benefits of zero-shot and few-shot learning capabilities. A significant portion is dedicated to a comparative analysis of TSFMs versus traditional forecasting methods, illustrating...

2025-05-24 · 1 h 11 min
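
Patching, which the TSFM summary names as common in Transformer-based models, turns a long series into a shorter sequence of tokens by grouping consecutive time steps. A minimal sketch, assuming a univariate series and illustrative patch length and stride; real models typically add normalization and a learned linear projection of each patch, which are omitted here, and the function name is hypothetical.

```python
from typing import List, Sequence


def make_patches(series: Sequence[float], patch_len: int, stride: int) -> List[List[float]]:
    """Split a univariate series into fixed-length patches (overlapping when stride < patch_len).
    Each patch would then be embedded as one input token for a Transformer."""
    if patch_len <= 0 or stride <= 0:
        raise ValueError("patch_len and stride must be positive")
    # Trailing points that do not fill a whole patch are dropped in this sketch.
    return [list(series[i:i + patch_len])
            for i in range(0, len(series) - patch_len + 1, stride)]


if __name__ == "__main__":
    print(make_patches(list(range(10)), patch_len=4, stride=2))
    print(len(make_patches(list(range(512)), patch_len=16, stride=16)))
```

With non-overlapping patches of 16, a 512-step context becomes 32 tokens, so the attention score matrix shrinks from 512 × 512 to 32 × 32 entries.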

VLLM: High-Throughput LLM Inference and Serving

The sources introduce and detail vLLM, a prominent open-source library designed for high-throughput and memory-efficient Large Language Model (LLM) inference. They explain its core innovations like PagedAttention and continuous batching, highlighting how these techniques revolutionize memory management and significantly boost performance compared to traditional systems. The text also outlines vLLM's architecture, including the recent V1 upgrades, its extensive features and capabilities (covering performance, memory, flexibility, and scalability), and its strong integration with MLOps workflows and various real-world applications across NLP, computer vision, and RL. Finally, the sources discuss comparisons with other serving frameworks, vLLM's robust development community...

2025-05-22 · 56 min
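
PagedAttention manages the KV cache much like virtual memory: each sequence has a block table mapping its logical blocks to physical blocks drawn from a shared pool, so memory is allocated on demand in small fixed-size blocks rather than reserved up front for the maximum length. The toy bookkeeping below is a hypothetical sketch, not vLLM's internal API; the class and method names are invented for illustration, the block size of 16 is just an illustrative default, and features such as copy-on-write block sharing and preemption are omitted.

```python
from typing import Dict, List, Tuple


class PagedKVCache:
    """Toy paged KV-cache bookkeeping (hypothetical): tracks which physical block
    holds each sequence's tokens; the actual key/value tensors are not modeled."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_tables: Dict[int, List[int]] = {}
        self.lengths: Dict[int, int] = {}

    def append_token(self, seq_id: int) -> None:
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        # A new physical block is needed only when the last block is full (or none exists yet).
        if length % self.block_size == 0:
            if not self.free_blocks:
                raise MemoryError("no free KV blocks; a real scheduler would preempt a sequence")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = length + 1

    def physical_slot(self, seq_id: int, position: int) -> Tuple[int, int]:
        """Translate a token position into (physical block id, offset within block)."""
        table = self.block_tables[seq_id]
        return table[position // self.block_size], position % self.block_size

    def free(self, seq_id: int) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=8, block_size=4)
    for _ in range(6):
        cache.append_token(0)
    for _ in range(3):
        cache.append_token(1)
    print(cache.block_tables, cache.physical_slot(0, 5))
```

Because each sequence wastes at most one partially filled block, fragmentation stays small, which is the kind of memory-efficiency gain the summary attributes to PagedAttention.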

The Agentic Web: Microsoft's Transformative Frontier

Examines the emergence of the Agentic Web, a new phase of the internet where autonomous AI agents can make decisions and perform complex tasks independently or collaboratively. It highlights Microsoft's strategic initiatives in this domain, particularly their vision for an "open agentic web" underpinned by platforms like Azure AI Foundry and Copilot Studio, and the introduction of open standards such as the Model Context Protocol (MCP) and projects like NLWeb for creating natural language interfaces. The text explores the profound implications of agentic AI for developers, businesses, and end-users, presenting opportunities for enhanced productivity and personalization alongside significant challenges...

2025-05-22 · 33 min

Diffusion Language Models: Concepts and Challenges

Explores the emergence and evolution of diffusion models, a powerful class of generative AI models that learn to synthesize data by reversing a gradual noising process. Initially successful in image and audio generation, researchers are increasingly adapting them to Natural Language Processing (NLP), giving rise to diffusion-based Large Language Models (LLMs). The text details the theoretical foundations rooted in non-equilibrium thermodynamics and Stochastic Differential Equations (SDEs), highlights landmark developments like DDPMs and Score-Based Generative Modeling, and compares them to traditional models like GANs and VAEs. Key challenges in applying diffusion models to the discrete nature of text...

2025-05-22 · 34 min
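
For continuous data such as images, the DDPM forward noising process mentioned in the summary has a standard closed form, shown here in the usual DDPM notation (β_t is the noise schedule and ε_θ the learned noise predictor); adapting this Gaussian process to discrete tokens is the text-specific challenge the summary refers to.

$$
q(x_t \mid x_0) = \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\,\mathbf{I}\right),
\qquad \bar\alpha_t = \prod_{s=1}^{t} (1-\beta_s),
$$

$$
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\quad \epsilon \sim \mathcal{N}(0,\mathbf{I}),
\qquad
\mathcal{L}_{\text{simple}} = \mathbb{E}_{t,\,x_0,\,\epsilon}\left[\lVert \epsilon - \epsilon_\theta(x_t, t) \rVert^2\right].
$$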

Superintelligence: Reshaping Warfare and Empowering Defenders

Discusses the transformative impact of superintelligence on defense, particularly in biological and cyber warfare. Historically, attackers have held an asymmetric advantage, but superintelligence could shift this by making defensive intelligence more affordable and effective, enabling proactive measures like advanced red-teaming, sophisticated pathogen modeling, and real-time cybersecurity fortification. While open-source intelligence (OSINT) offers benefits, its amplification by superintelligence is a double-edged sword, and navigating this era requires ethical frameworks, international cooperation, and continuous strategic adaptation to evolving threats. The text uses historical examples and current AI case studies to illustrate the potential for superintelligence to fundamentally...

2025-05-22 · 43 min

Project Astra: Universal AI Assistant Development and Implications

Covers Google DeepMind's Project Astra, a research initiative aiming to create a universal AI assistant. The project focuses on developing an AI that can understand multimodal inputs like voice and vision in real time, possess a sophisticated memory across devices, and exhibit "Action Intelligence" to control devices and applications. While showcasing potential applications in areas like education, accessibility, and daily tasks, the texts also acknowledge significant technical, ethical, and societal challenges, particularly concerning data privacy, bias, and accountability. Google's strategy involves iterative integration into existing products like Gemini Live and Google Search, alongside the release of a developer API to...

2025-05-22 · 24 min

Google I/O 2025: An AI Revolution Unleashed

The Google I/O 2025 event showcased Google's intense focus on integrating AI, particularly its Gemini models, across a wide range of products and services. This includes transforming Google Search into a more conversational "answer engine" with features like AI Mode and AI Overviews, and introducing advanced AI for creative tasks like video generation. Google also unveiled future hardware initiatives like 3D video conferencing (Google Beam) and AI-powered smart glasses, positioning Android XR as a key platform. The company is also enhancing everyday tools like Meet, Chrome, and Gmail with AI capabilities and launching new AI subscription tiers, while also...

2025-05-22 · 39 min

Clara Unplugged: Your Local AI Universe

Introduces Clara, an open-source AI workspace designed for complete local operation with no reliance on cloud services, API keys, or external backends, prioritizing user privacy and data ownership. Clara provides a suite of tools including local LLM chat via Ollama, tool calling for agents to interact with other systems, a visual agent builder with templates, offline Stable Diffusion image generation using ComfyUI, and a built-in n8n-style automation engine, allowing users to build custom applications and workflows. The article details various installation methods and highlights user testimonials showcasing Clara's practical application in areas like...

2025-05-20 · 20 min

Physical Artificial Intelligence: Embodiment and Interaction

The sources explore the emerging field of Physical Artificial Intelligence (Physical AI), which extends AI capabilities from the digital realm into the tangible world. They explain how Physical AI systems utilize AI algorithms, sensors, and robotics to perceive, reason, and act in physical environments, contrasting this with traditional, disembodied AI. The texts trace the historical roots from early automatons and cybernetics to modern embodied AI and robotics foundation models, while also discussing the significant technical challenges in hardware, software integration, data requirements, and the sim-to-real gap. Finally, the sources examine the practical applications across industries...

2025-05-20 · 52 min

AI Recruitment: Risks, Regulations, and Responsible Practice

Discusses the growing use of Large Language Models (LLMs) in candidate scoring for recruitment, highlighting both their potential benefits and considerable risks. It details how LLMs analyze candidate data, but focuses heavily on inherent biases (gender, race, age, socioeconomic) that can lead to discriminatory hiring outcomes, citing real-world examples like Amazon and iTutorGroup. The document also explains the complex and evolving legal and ethical landscape, covering regulations in the EU, US, and Canada and emphasizing the principles of Fairness, Accountability, and Transparency (FAT) and the challenge of the AI's "black box" nature. Finally, it provides strategic recommendations...

2025-05-19 · 42 min

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

LLMs for Resume Parsing

Discusses how Large Language Models (LLMs) are transforming resume parsing and talent acquisition. Compared with older rule-based or traditional machine learning methods, they enable a more sophisticated understanding and extraction of information from varied resume formats. While LLMs offer benefits such as improved efficiency and the ability to handle unstructured data, they also introduce significant challenges, particularly algorithmic bias and data privacy. The episode highlights the importance of human oversight and bias mitigation strategies. It also covers the impact of regulations such as GDPR, NYC Local Law 144, and the EU AI Act on the ethical and practical deployment of these technologies in hiring...

2025-05-19 · 32 min

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

LLM Sampling and Decoding Strategies Explained

Explores how to control the text generated by Large Language Models (LLMs) by examining various decoding strategies and sampling parameters. Key parameters such as temperature, top-k sampling, and top-p (nucleus) sampling are explained, detailing their mechanisms and their impact on balancing output creativity against coherence. It also discusses the history and evolution of these techniques, highlighting newer, more adaptive methods and the importance of practical experimentation for task-specific tuning. Finally, it touches on additional user-defined constraints that further shape LLM outputs.

2025-05-19 · 29 min

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

LangChain and LangSmith for LLM Applications

Describes the roles of LangChain and LangSmith in developing and deploying Large Language Model (LLM) applications. LangChain is presented as an open-source framework that provides components and abstractions to streamline building LLM applications. LangSmith is presented as a complementary platform offering tools for debugging, testing, evaluating, and monitoring those applications. LangSmith helps move LLM prototypes into production in several ways:

- tracing, which gives deep visibility into application behavior
- systematic evaluation against datasets
- support for prompt engineering and management
- monitoring features for live applications

The text also explores practical applications across industries, technical architecture, comparisons with other...

2025-05-15 · 33 min

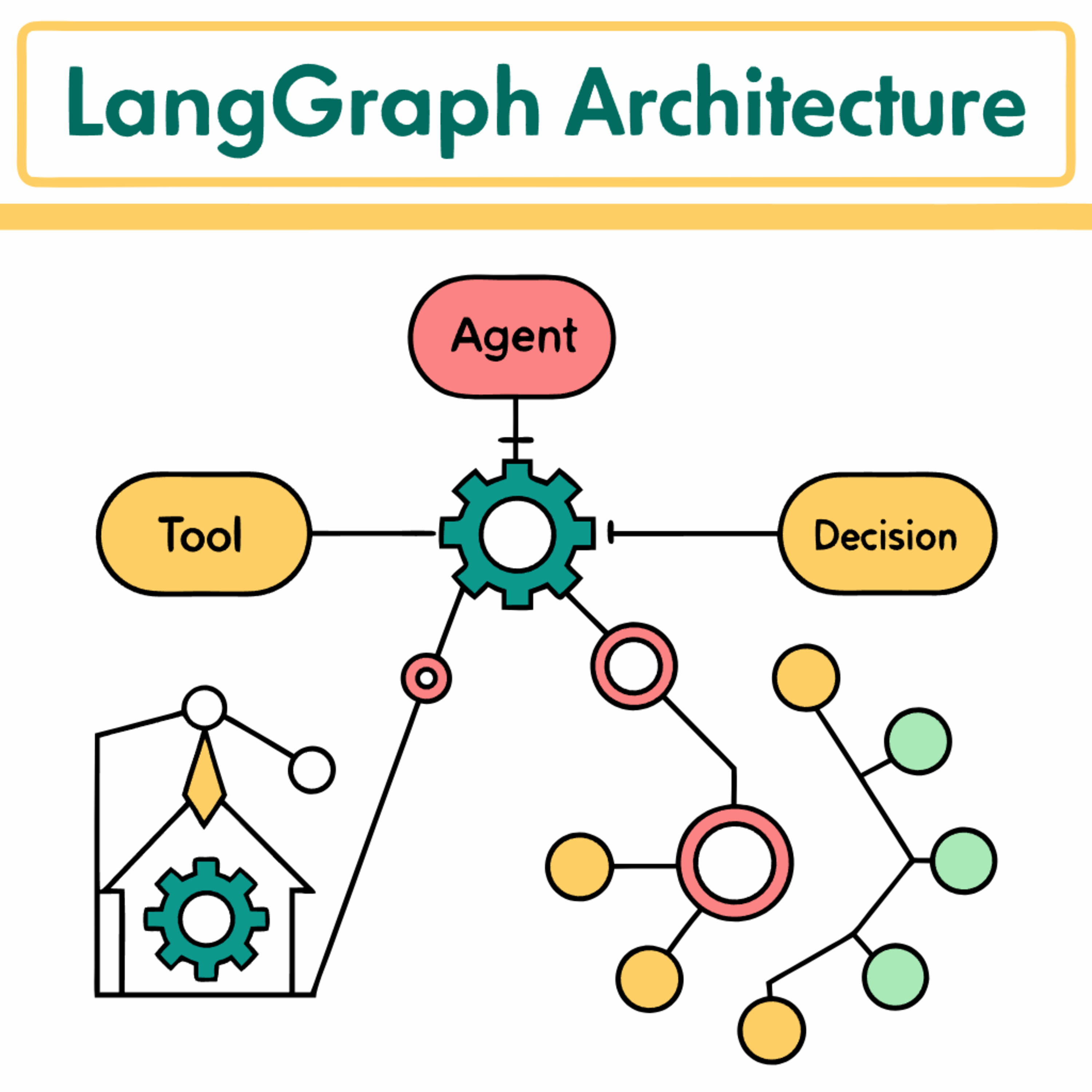

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

LangGraph for Advanced LLM Orchestration

Introduces LangGraph, a library that extends LangChain to build stateful, multi-actor Large Language Model applications using cyclical graphs. It highlights LangGraph's core purpose: enabling complex, dynamic agent runtimes through robust mechanisms for state management, agent coordination, and the cyclical processes that iterative behaviors depend on. The sources also cover several further topics:

- LangGraph's architecture, based on State, Nodes, and Edges
- a comparison with other frameworks such as CrewAI and AutoGen
- security considerations and performance evaluation metrics
- the ecosystem's support tools, including LangSmith for observability and the LangGraph Platform for deployment

Ultimately, the text showcases LangGraph's utility through case studies and...

2025-05-15 · 28 min

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

LangChain: Orchestrating LLM Applications

Provides a comprehensive overview of LangChain, a popular open-source framework for building applications with large language models (LLMs). It explains LangChain's modular architecture and its key components, such as LCEL, models, prompts, chains, agents, tools, memory, and indexes, illustrating how they connect LLMs to data and enable complex workflows and interactions. The text also details LangChain's integration capabilities with various LLMs and data sources and showcases practical use cases and successful enterprise applications. It compares LangChain with other frameworks and approaches, such as Hugging Face and direct API usage. Finally, it discusses developer challenges and best practices, including debugging with LangSmith, addresses...

2025-05-15 · 54 min

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

EU AI Act: Post-2025 Developments and Compliance

Since January 2025, the EU AI Act has progressed from legislative text to active compliance obligations for businesses operating in the EU. Key developments in February 2025 include:

- bans on certain AI practices taking effect
- AI literacy requirements taking effect
- interpretative guidelines issued by the European Commission and the new European AI Office

Specific rules for General-Purpose AI (GPAI) models become applicable in August 2025, supported by an emerging Code of Practice, and the penalty regime also takes effect then. Comprehensive obligations for most high-risk AI systems have later deadlines (August 2026/2027), but challenges in developing harmonised standards create...

2025-05-12 · 23 min

Rapid Synthesis: Delivered under 30 mins..ish, or it's on me!

Apache Mahout: Evolution and Future

Covers Apache Mahout, an open-source project from the Apache Software Foundation. Mahout has evolved from a MapReduce-based machine learning library into a project focused on providing a Scala DSL for scalable linear algebra, primarily using Apache Spark as its distributed backend. Mahout excels in areas like recommendation systems, including a unique Correlated Co-Occurrence algorithm, and in dimensionality reduction techniques. However, many older algorithms are deprecated, and the project's recent strategic direction is heavily influenced by Qumat, an initiative focused on quantum computing. The text details Mahout's architecture, key components such as Distributed Row Matrices (DRMs), and performance enhancements, and compares it to...

2025-05-12 · 25 min