Shows

Justified Posteriors

Can an AI Interview You Better Than a Human?

We discuss “Voice in AI Firms: A Natural Field Experiment on Automated Job Interviews” by Brian Jabarian and Luca Henkel. The paper examines a randomized experiment with call center job applicants in the Philippines who were assigned to AI-conducted voice interviews, assigned to human interviews, or given a choice between the two.

Key Findings:

* AI interviews led to higher job offer rates and proportionally higher retention rates
* No significant difference in involuntary terminations between groups
* Applicants actually preferred AI interviews, likely due to scheduling flexibility and immediate availability
* AI interviewers kept c...

2026-01-26 · 1h 00

AI: post transformers

OpenRouter 2025 Report: Analysis of Global LLM Usage Patterns

We review two research papers, one from January 15, 2026 by OpenRouter Inc and a16z (Andreessen Horowitz) and another from April 2025 by Andrey Fradkin (Boston University and MIT IDE). Both analyze the evolving market dynamics and user behaviors within the Large Language Model (LLM) ecosystem, primarily using data from the **OpenRouter marketplace**. The studies document that new AI models see **rapid adoption** upon release, with demand patterns suggesting that models are **horizontally and vertically differentiated** rather than being simple commodities. Researchers found that while some releases **expand the overall market**, others primarily trigger **substitution** within...

2026-01-19 · 14 min

Justified Posteriors

Anecdotes from AI Supercharged Science

Anecdotes of AI Supercharged Science: Justified Posteriors reads “Early Science Acceleration Experiments with GPT-5”

In this episode, Seth and Andrey break down OpenAI’s report, Early Science Acceleration Experiments with GPT-5. The paper is organized as a series of anecdotes about how top scientists used an early version of GPT-5 in their scientific investigations. The coauthors of the paper try out the model to help them with everything from Erdős’ unsolved math problems to understanding black hole symmetries to interpreting the results of a biological experiment. Seth and Andrey’s priors revolve around whether current models are closer...

2026-01-13 · 1h 07

Justified Posteriors

Ben Golub: AI Referees, Social Learning, and Virtual Currencies

In this episode, we sit down with Ben Golub, economist at Northwestern University, to talk about what happens when AI meets academic research, social learning, and network theory.

We start with Ben’s startup Refine, an AI-powered technical referee for academic papers. From there, the conversation ranges widely: how scholars should think about tooling, why “slop” is now cheap, how eigenvalues explain viral growth, and what large language models might do to collective belief formation. We get math, economics, startups, misinformation, and even cow tipping.

Links & References

* Refine: AI referee for academic papers
* H...

2025-12-29 · 1h 16

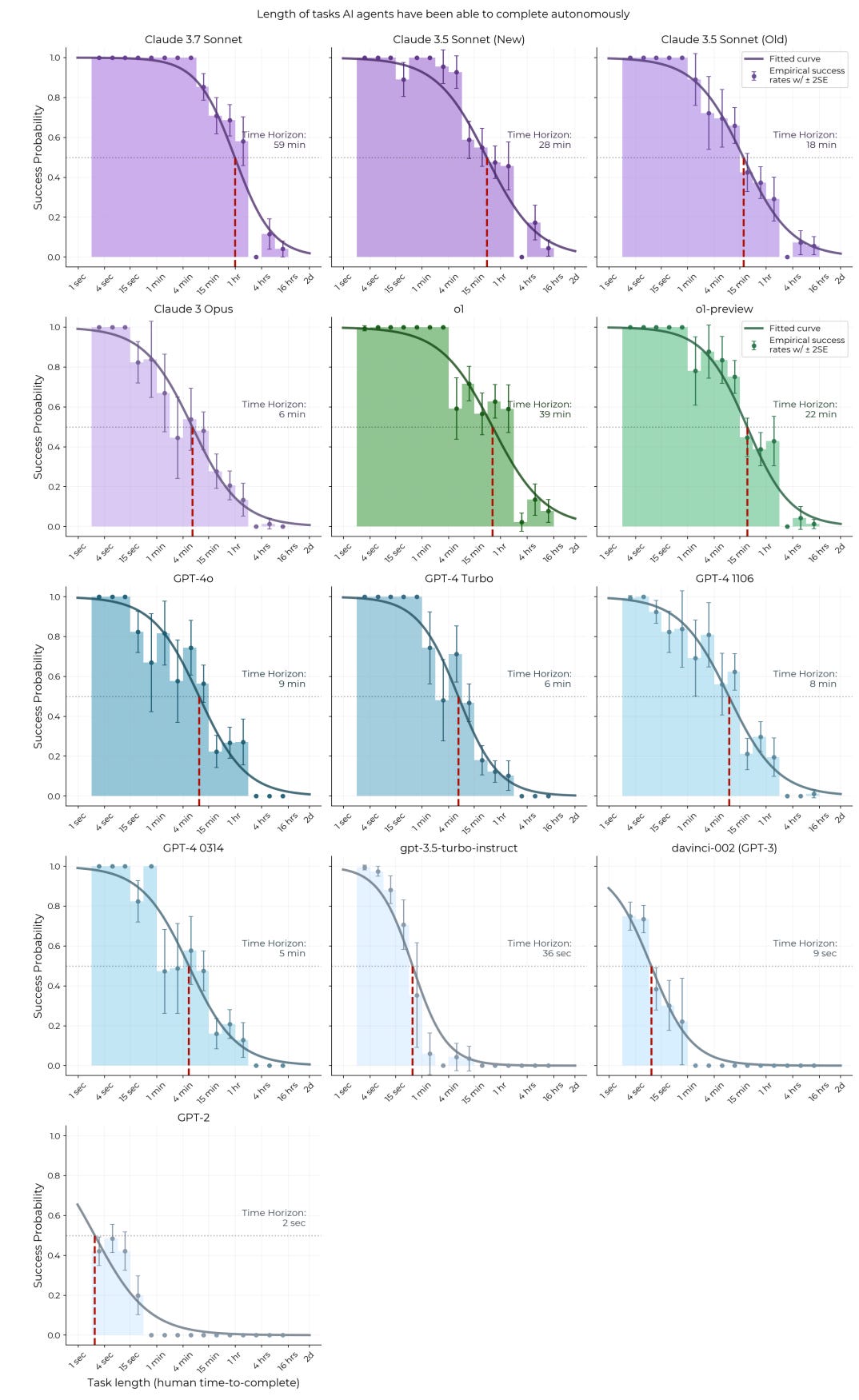

Justified Posteriors

Are We There Yet? Evaluating METR’s Eval of AI’s Ability to Complete Tasks of Different Lengths

Seth and Andrey are back to evaluating an AI evaluation, this time discussing METR’s paper “Measuring AI Ability to Complete Long Tasks.” The paper’s central claim is that the “effective horizon” of AI agents (the length of tasks they can complete autonomously) is doubling every 7 months. Extrapolate that, and AI handles month-long projects by decade’s end. They discuss the data and the assumptions that go into this benchmark. Seth and Andrey start by walking through the tests of task length, from simple atomic actions to the 8-hour research simulations in RE-Bench. They discuss whether the paper properly...

2025-12-15 · 1h 06

Justified Posteriors

Epistemic Apocalypse and Prediction Markets (Bo Cowgill Pt. 2)

We continue our conversation with Columbia professor Bo Cowgill. We start with a detour through Roman Jakobson’s six functions of language (plus two bonus functions Seth insists on adding: performative and incantatory). Can LLMs handle the referential? The expressive? The poetic? What about magic?

The conversation gets properly technical as we dig into Crawford-Sobel cheap talk models, the collapse of costly signaling, and whether “pay to apply” is the inevitable market response to a world where everyone can produce indistinguishable text. Bo argues we’ll see more referral hiring (your network as the last remaining credible signal...

2025-12-02 · 1h 02

Justified Posteriors

Does AI Cheapen Talk? (Bo Cowgill Pt. 1)

In this episode, we brought on our friend Bo Cowgill to dissect his forthcoming Management Science paper, Does AI Cheapen Talk? The core question is one economists have been circling since Spence drew a line on the blackboard: What happens when a technology makes costly signals cheap? If GenAI allows anyone to produce polished pitches, résumés, and cover letters, what happens to screening, hiring, and the entire communication equilibrium?

Bo’s answer: it depends. Under some conditions, GenAI induces an epistemic apocalypse, flattening signals and confusing recruiters. In others, it reveals skill even more sharply, givi...

2025-11-18 · 53 min

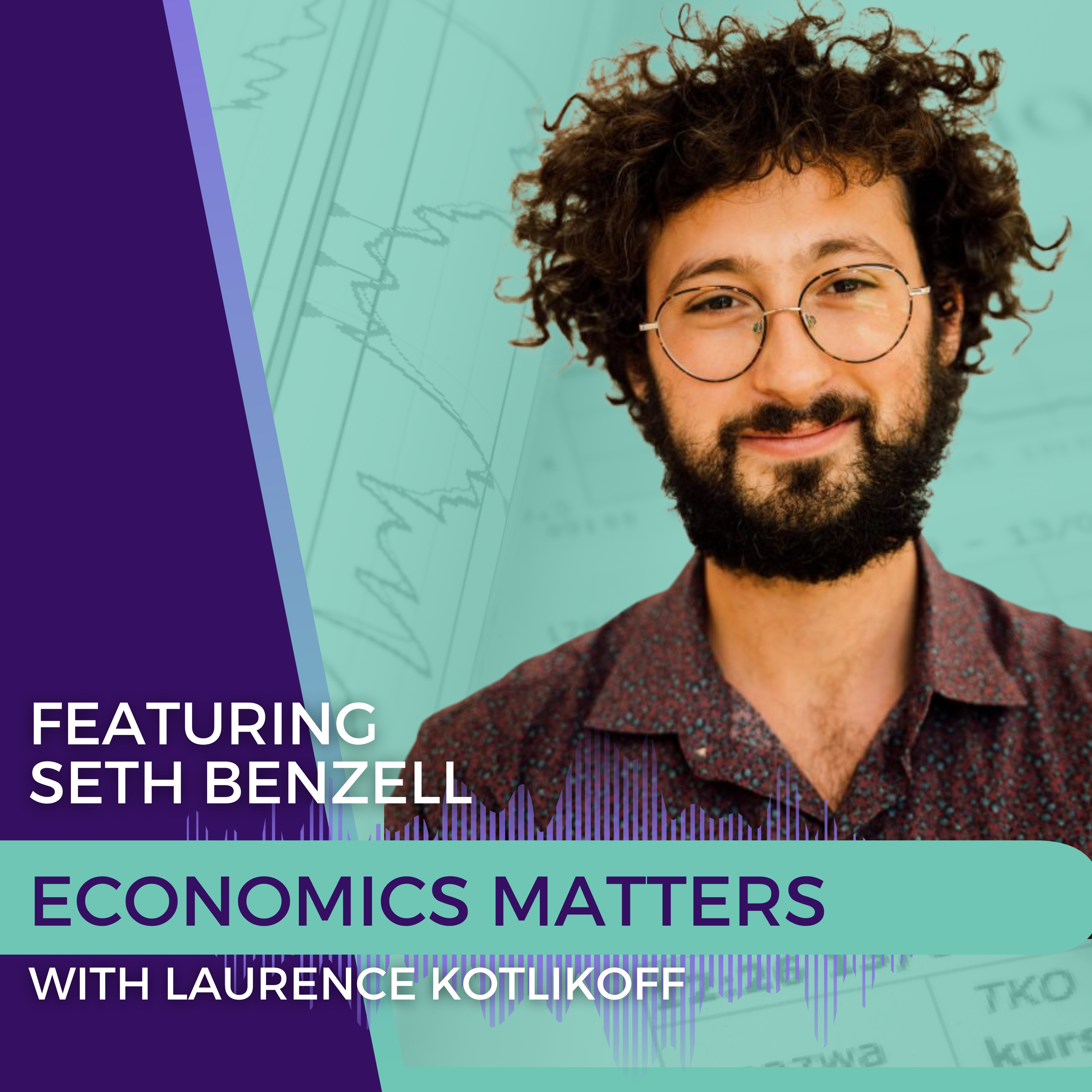

Economics Matters with Laurence Kotlikoff

Seth Benzell is Back to Update Us on All things AI

With the leading ten AI companies comprising one third of the value of the S&P, none showing a profit, and the threat they pose to our jobs, let alone humanity, we best keep careful track of the industry. My former graduate student, Seth Benzell, is one of our nation's top AI experts. Seth discusses AI's so-called scaling law, the problem of too little data to fit the industry's parameters mystically being cured by double descent, and the bitter lesson. Seth also talks us through how much money AI can save and, therefore, make by replacing humans in accompli...

2025-11-14 · 1h 03

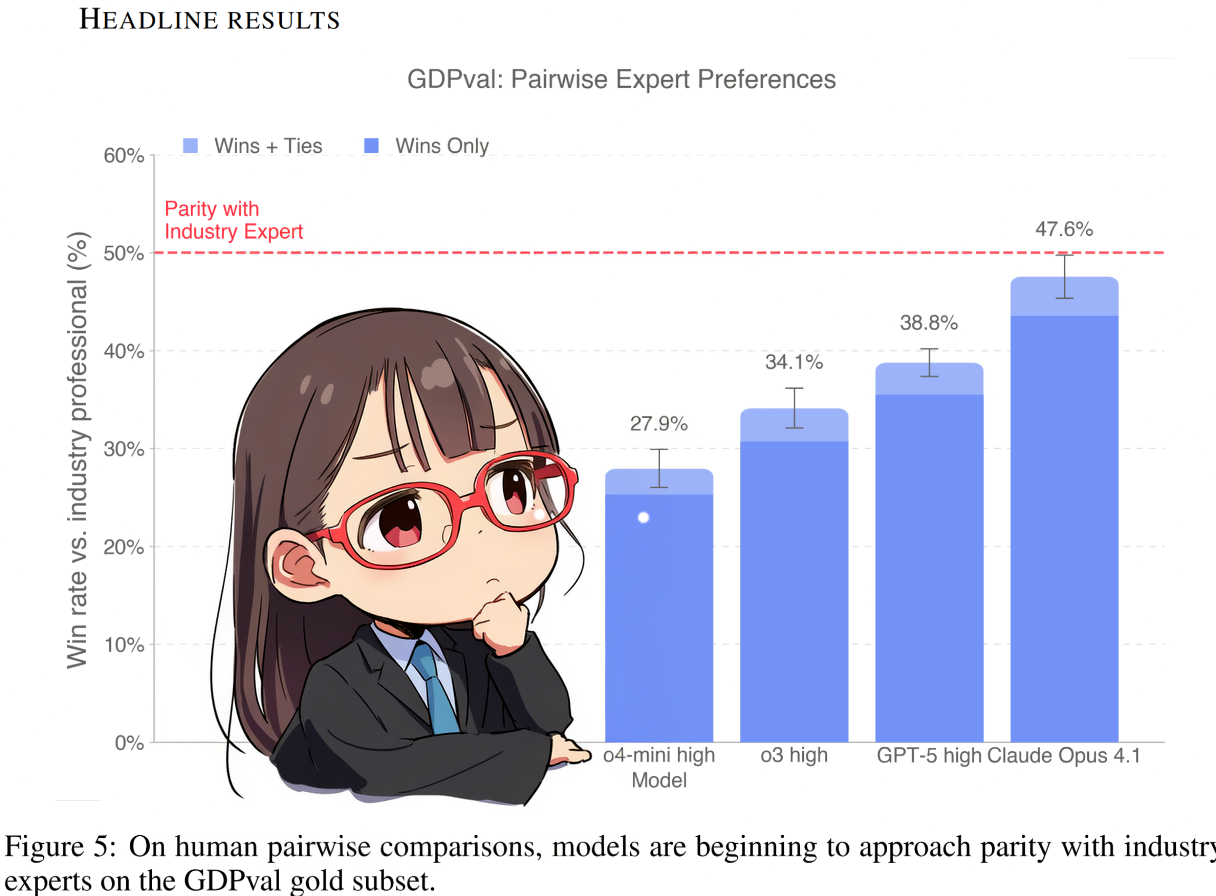

Justified Posteriors

Evaluating GDPVal, OpenAI's Eval for Economic Value

In this episode of the Justified Posteriors podcast, Seth and Andrey discuss “GDPVal,” a new set of AI evaluations, really a novel approach to AI evaluation, from OpenAI. The metric is debuted in a new OpenAI paper, “GDP Val: Evaluating AI Model Performance on Real-World, Economically Valuable Tasks.” We discuss this “bottom-up” approach to the possible economic impact of AI (which evaluates hundreds of specific tasks, multiplying each by its estimated economic value in the economy), and contrast it with Daron Acemoglu’s “top-down” “Simple Macroeconomics of AI” paper (which does the same, but only for aggregate averages), as well as with measures...

2025-11-04 · 1h 04

Justified Posteriors

Will Super-Intelligence's Opportunity Costs Save Human Labor?

In this episode, Seth Benzell and Andrey Fradkin read “We Won’t Be Missed: Work and Growth in the AGI World” by Pascual Restrepo (Yale) to understand how AGI will change work in the long run. A common metaphor for the post-AGI economy is to compare AGIs to humans and humans to ants. Will the AGI want to keep the humans around? Some argue that they would: there’s the possibility of useful exchange with the ants, even if they are small and weak, because an AGI will, definitionally, have opportunity costs. You might view Pascual’s paper as a f...

2025-10-21 · 51 min

Justified Posteriors

Can political science contribute to the AI discourse?

Economists generally see AI as a production technology, or input into production. But maybe AI is actually more impactful as unlocking a new way of organizing society. Finish this story:

* The printing press unlocked the Enlightenment, along with both liberal democracy and France’s Reign of Terror
* Communism is primitive socialism plus electricity
* The radio was an essential prerequisite for fascism
* AI will unlock ????

We read “AI as Governance” by Henry Farrell in order to understand whether and how political scientists are thinking about this question.

* Concepts or other...

2025-10-07 · 58 min

Justified Posteriors

Should AI Read Without Permission?

Many of today’s thinkers and journalists worry that AI models are eating their lunch: hoovering up these authors’ best ideas and giving them away for free or nearly free. Beyond fairness, there is a worry that these authors will stop producing valuable content if they can’t be compensated for their work. On the other hand, making lots of data freely accessible makes AI models better, potentially increasing the utility of everyone using them. Lawsuits are working their way through the courts as we speak of AI with property rights. Society needs a better understanding of the harms and be...

2025-09-22 · 55 min

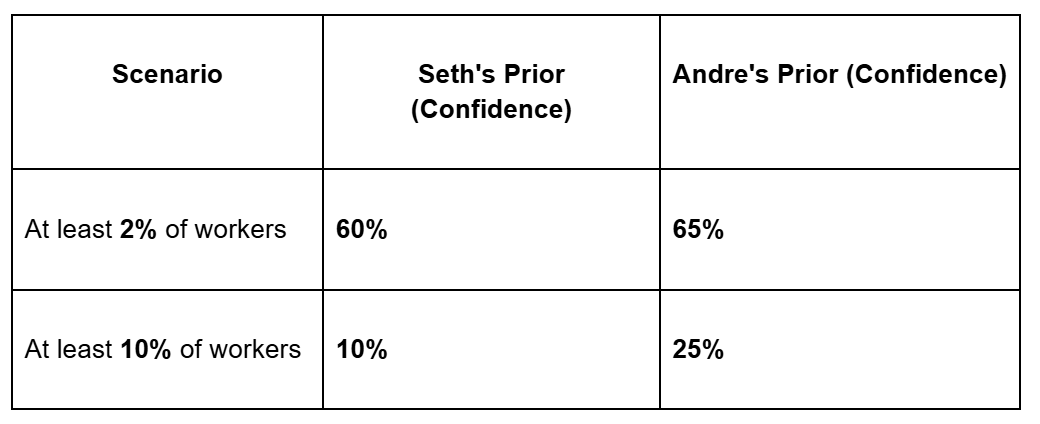

Justified Posteriors

EMERGENCY POD: Is AI already causing youth unemployment?

In our first ever EMERGENCY PODCAST, co-host Seth Benzell is summoned out of paternity leave by Andrey Fradkin to discuss the AI automation paper that’s making headlines around the world. The paper is “Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence” by Erik Brynjolfsson, Bharat Chandar, and Ruyu Chen. The paper is being heralded as the first evidence that AI is negatively impacting employment for young workers in certain careers. Seth and Andrey dive in, and ask: what do we believe about AI’s effect on youth employment going in, and what c...

2025-09-09 · 51 min

Justified Posteriors

AI and its labor market effects in the knowledge economy

In this episode, we discuss a new theoretical framework for understanding how AI integrates into the economy. We read the paper “Artificial Intelligence and the Knowledge Economy” (Ide & Talamas, JPE), and debate whether AI will function as a worker, a manager, or an expert. Read on to learn more about the model, our thoughts, and timestamps; at the end, you can spoil yourself on Andrey and Seth’s prior beliefs and posterior conclusions. Thanks to Abdullahi Hassan for compiling these notes to make this possible.

The Ide & Talamas Model

Our discussion was based on the pape...

2025-08-25 · 56 min

Justified Posteriors

One LLM to rule them all?

In this special episode of the Justified Posteriors Podcast, hosts Seth Benzell and Andrey Fradkin dive into the competitive dynamics of large language models (LLMs). Using Andrey’s working paper, Demand for LLMs: Descriptive Evidence on Substitution, Market Expansion, and Multihoming, they explore how quickly new models gain market share, why some cannibalize predecessors while others expand the user base, and how apps often integrate multiple models simultaneously.

Host’s note: this episode was recorded in May 2025, and things have been rapidly evolving. Look for an update sometime soon.

Transcript

Seth: Welcome to Justif...

2025-08-12 · 1h 02

Neues aus der Managementforschung in 220 Sekunden

Online Job Platforms: What Do Applicants Respond To?

Ep 65. Online Job Platforms: What Do Applicants Respond To? (August 2025)

Based on the article “Competition Avoidance vs. Herding in Job Search: Evidence from Large-Scale Field Experiments on an Online Job Board” by Andrey Fradkin, Monica Bhole, and John J. Horton.

https://doi.org/10.1287/mnsc.2023.02483

The podcast on corporate management and organization for practitioners, bringing you current findings from leading international research briefly and simply.

By and with Prof. Dr. Markus Reitzig, Professor of Corporate Strategy at the University of Vienna.

This podcast is part of the brand eins network.

2025-08-01 · 04 min

Justified Posteriors

What can we learn from AI exposure measures?

In a Justified Posteriors first, hosts Seth Benzell and Andrey Fradkin sit down with economist Daniel Rock, assistant professor at Wharton and AI2050 Schmidt Science Fellow, to unpack his groundbreaking research on generative AI, productivity, exposure scores, and the future of work. Through a wide-ranging and insightful conversation, the trio examines how exposure to AI reshapes job tasks and why the difference between exposure and automation matters deeply.

Links to the referenced papers, as well as a lightly edited transcript of our conversation, with timestamps, are below:

Timestamps:

[00:08] – Meet Daniel Rock
[02:04] – Why AI? The...

2025-07-28 · 1h 11

Justified Posteriors

A Resource Curse for AI?

In this episode of Justified Posteriors, we tackle the provocative essay “The Intelligence Curse” by Luke Drago and Rudolf Laine. What if AI is less like a productivity booster and more like oil in a failed state? Drawing from economics, political theory, and dystopian sci-fi, we explore the analogy between AI-driven automation and the classic resource curse.

* [00:03:30] Introducing The Intelligence Curse – A speculative essay that blends LessWrong rationalism, macroeconomic theory, and political pessimism.
* [00:07:55] Running through the six economic mechanisms behind the curse, including volatility, Dutch disease, and institutional decay.
* [00:13:10] Prior #1: Will AI-enabled automation make e...

2025-07-14 · 1h 09

Justified Posteriors

Robots for the retired?

In this episode of Justified Posteriors, we examine the paper "Demographics and Automation" by economists Daron Acemoglu and Pascual Restrepo. The central hypothesis of this paper is that aging societies, facing a scarcity of middle-aged labor for physical production tasks, are more likely to invest in industrial automation.

Going in, we were split. One of us thought the idea made basic economic sense, while the other was skeptical, worrying that a vague trend of "modernity" might be the real force causing both aging populations and a rise in automation. The paper threw a mountain of data at...

2025-06-30 · 1h 00

Justified Posteriors

When Humans and Machines Don't Say What They Think

Andrey and Seth examine two papers exploring how both humans and AI systems don't always say what they think. They discuss Luca Braghieri's study on political correctness among UC San Diego students, which finds surprisingly small differences (0.1-0.2 standard deviations) between what students report privately versus publicly on hot-button issues. We then pivot to Anthropic's research showing that AI models can produce chain-of-thought reasoning that doesn't reflect their actual decision-making process. Throughout, we grapple with fundamental questions about truth, social conformity, and whether any intelligent system can fully understand or honestly represent its own thinking.

Timestamps (Transcript...

2025-06-17 · 1h 09

Justified Posteriors

Scaling Laws Meet Persuasion

In this episode, we tackle the thorny question of AI persuasion with a fresh study: "Scaling Language Model Size Yields Diminishing Returns for Single-Message Political Persuasion." The headline? Bigger AI models plateau in their persuasive power around the 70B parameter mark (think LLaMA 2 70B or Qwen-1.5 72B).

As you can imagine, this had us diving deep into what this means for AI safety concerns and the future of digital influence. Seth came in worried that super-persuasive AIs might be the top existential risk (60% confidence!), while Andrey was far more skeptical (less than 1%).

Before jumping into th...

2025-06-03 · 40 min

Justified Posteriors

Techno-prophets try macroeconomics: are they hallucinating?

In this episode, we tackle a brand new paper from the folks at Epoch AI called the "GATE model" (Growth and AI Transition Endogenous model). It makes some bold claims. The headline grabber? Their default scenario projects a whopping 23% global GDP growth in 2027! As you can imagine, that had us both (especially Andrey) practically falling out of our chairs. Before diving into GATE, Andrey shared a bit about the challenge of picking readings for his PhD course on AGI and business – a tough task when the future hasn't happened yet! Then, we broke down the GATE model it...

2025-05-19 · 1h 06

Justified Posteriors

Did Meta's Algorithms Swing the 2020 Election?

We hear it constantly: social media algorithms are driving polarization, feeding us echo chambers, and maybe even swinging elections. But what does the evidence actually say? In the darkest version of this narrative, social media platform owners are shadow king-makers and puppet masters who can select the winner of a close election by selectively promoting narratives. Amorally, they disregard the heightened political polarization and mental anxiety which are the consequence of their manipulations of the public psyche. In this episode, we dive into an important study published in Science (How do social media feed algorithms affect...

2025-05-05 · 50 min

Justified Posteriors

Claude Just Refereed the Anthropic Economic Index

In this episode of Justified Posteriors, we dive into the paper "Which Economic Tasks Are Performed with AI: Evidence from Millions of Claude Conversations." We analyze Anthropic's effort to categorize how people use their Claude AI assistant across different economic tasks and occupations, examining both the methodology and implications with a critical eye.

We came into this discussion expecting coding and writing to dominate AI usage patterns, and while the data largely confirms this, our conversation highlights several surprising insights. Why are computer and mathematical tasks so heavily overrepresented, while office administrative work lags behind? What explains th...

2025-04-21 · 1h 01

Justified Posteriors

How much should we invest in AI safety?

In this episode, we tackle one of the most pressing questions of our technological age: how much risk of human extinction should we accept in exchange for unprecedented economic growth from AI?

The podcast explores research by Stanford economist Chad Jones, who models scenarios where AI might deliver a staggering 10% annual GDP growth but carry a small probability of triggering an existential catastrophe. We dissect how our risk tolerance depends on fundamental assumptions about utility functions, time horizons, and what actually constitutes an "existential risk."

We discuss how Jones’ model presents some stark calculations: with ce...

2025-04-07 · 1h 09

Justified Posteriors

Can AI make better decisions than an ER doctor?

Dive into the intersection of economics and healthcare with our latest podcast episode. How much can AI systems enhance high-stakes medical decision-making? In this episode, we explore the implications of a research paper titled “Diagnosing Physician Error: A Machine Learning Approach to Low Value Health Care” by Sendhil Mullainathan and Ziad Obermeyer.

The paper argues that physicians often make predictable and costly errors in deciding who to test for heart attacks. The authors claim that incorporating machine learning could significantly improve the efficiency and outcome of such tests, reducing the cost per life year saved while maintaining or i...

2025-03-24 · 43 min

Justified Posteriors

If the Robots Are Coming, Why Aren't Interest Rates Higher?

In this episode, we tackle an intriguing question inspired by a recent working paper: If artificial general intelligence (AGI) is imminent, why are real interest rates so low?

The discussion centers on the provocative paper, "Transformative AI, Existential Risk, and Real Interest Rates", authored by Trevor Chow, Basil Halperin, and J. Zachary Mazlish. This research argues that today's historically low real interest rates signal market skepticism about the near-term arrival of transformative AI, defined here as either technology generating massive economic growth (over 30% annually) or catastrophic outcomes like total extinction.

We found ourselves initially at od...

2025-03-11 · 59 min

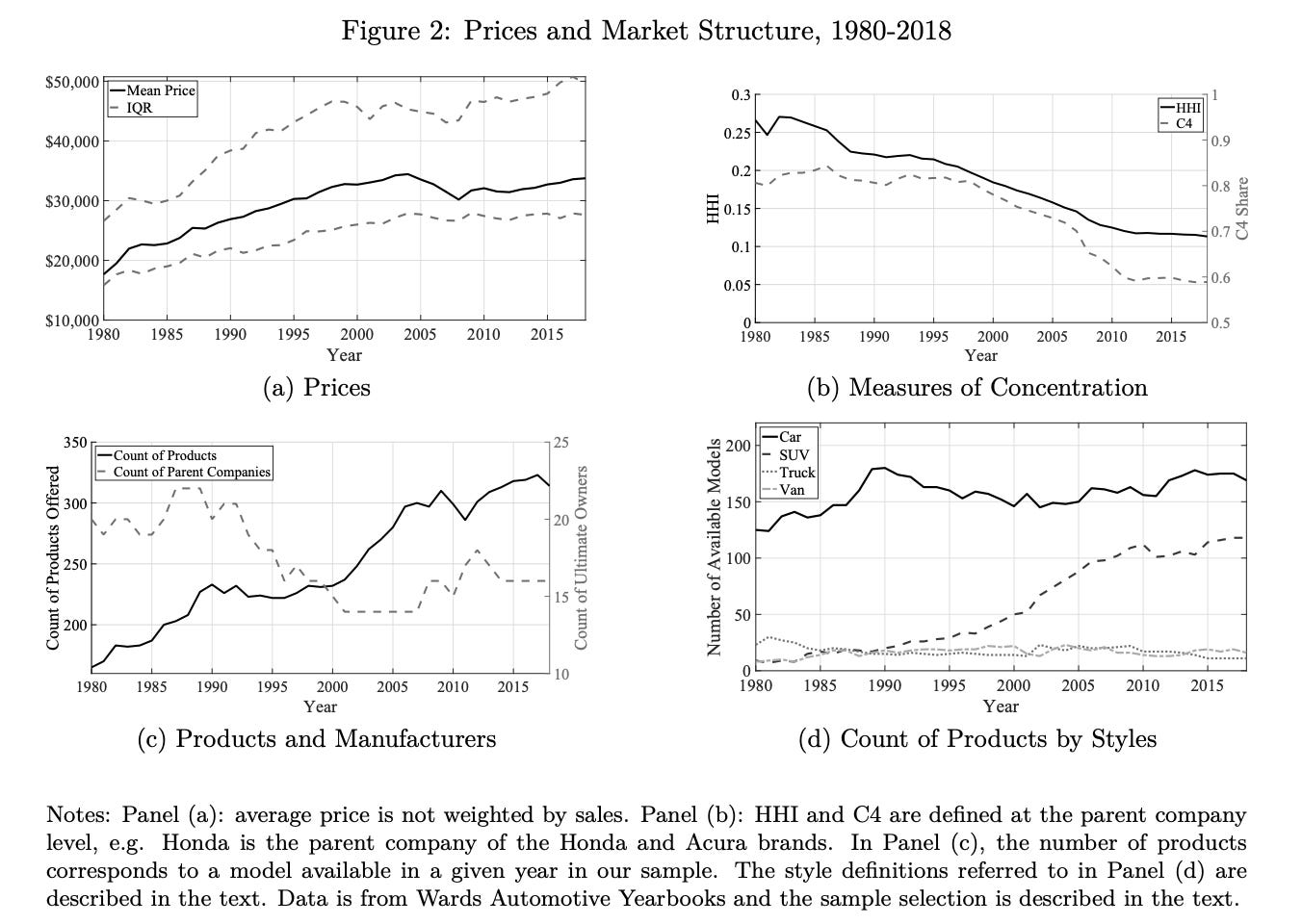

Justified Posteriors

High Prices, Higher Welfare? The Auto Industry as a Case Study

Does the U.S. auto industry prioritize consumers or corporate profits? In this episode of Justified Posteriors, hosts Seth Benzell and Andrey Fradkin explore the evidence behind this question through the lens of the research paper “The Evolution of Market Power and the U.S. Automobile Industry” by Paul Grieco, Charles Murry, and Ali Yurukoglu.

Join them as they unpack trends in car prices, market concentration, and consumer surplus, critique the methodology, and consider how competition and innovation shape the auto industry. Could a different competitive structure have driven even greater innovation? Tune in to find out!

2025-02-24 · 53 min

Justified Posteriors

Scaling Laws in AI

Does scaling alone hold the key to transformative AI?

In this episode of Justified Posteriors, we dive into the topic of scaling laws in artificial intelligence (AI), discussing a set of paradigmatic papers.

We discuss the idea that as more compute, data, and parameters are added to machine learning models, their performance improves predictably. Referencing several pivotal papers, including early works from OpenAI and empirical studies, we explore how scaling laws translate to model performance and potential economic value. We also debate the ultimate usefulness and limitations of scaling laws, considering whether purely increasing compute...

2025-02-11 · 59 min

Justified Posteriors

Is Social Media a Trap?

Are we trapped by the social media we love? In this episode of the “Justified Posteriors” podcast, hosts Seth Benzell and Andrey Fradkin discuss a research paper examining the social and economic impacts of TikTok and Instagram usage among college students. The paper, authored by Leonardo Bursztyn, Benjamin Handel, Rafael Jimenez, and Christopher Roth, suggests that these platforms may create a “collective trap” where users prefer a world where no one used social media, despite the platforms' popularity. Through surveys, the researchers found that students place significant value on these platforms but also experience negative social externalities. The discussion explores...

2025-01-26 · 37 min

Justified Posteriors

Beyond Task Replacement

In this episode, we discuss Artificial Intelligence Technologies and Aggregate Growth Prospects by Timothy Bresnahan.

* We contrast Tim Bresnahan's paper on AI's impact on economic growth with Daron Acemoglu's task-replacement focused approach from the previous episode.
* Bresnahan argues that AI's main economic benefits will come through:
  * Reorganizing organizations and tasks
  * Capital deepening (improving existing machine capabilities)
  * Creating new products and services rather than simply replacing human jobs
* We discuss examples from big tech companies:
  * Amazon's product recommendations
  * Google's search capabilities
  * Voice...

2025-01-11 · 44 min

Justified Posteriors

The Simple Macroeconomics of AI

Will AI's impact be as modest as predicted, or could it exceed expectations in reshaping economic productivity? In this episode, hosts Seth Benzell and Andrey Fradkin discuss the paper “The Simple Macroeconomics of AI” by Daron Acemoglu, an economist and an institute professor at MIT.

Additional notes from friend of the podcast Daniel Rock of Wharton, coauthor of “GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models,” one of the papers cited in the show, and a main data source for Acemoglu’s paper: (1) Acemoglu does not use the paper’s ‘main’ est...

2024-12-21 · 53 min

Justified Posteriors

Situational Awareness

How close are we to AGI, and what might its impact be on the global stage? In this episode, hosts Seth Benzell and Andrey Fradkin tackle the high-stakes world of artificial intelligence, focusing on the transformative potential of Artificial General Intelligence (AGI). The conversation is based on Leopold Aschenbrenner’s essay 'Situational Awareness', which argues that AI's development follows a predictable scaling law that allows for reliable projections about when AGI will emerge. The hosts also discuss Leopold’s thoughts on the geopolitical implications of AGI, including the influence of AI on military and social conflicts.

Sp...

2024-12-07 · 1h 02

Mobile Dev Memo Podcast

Season 4, Episode 6: Consumer welfare and antitrust in tech (with Andrey Fradkin)

My guest on this week's episode of the Mobile Dev Memo podcast is Andrey Fradkin, an Assistant Professor of Marketing at Boston University's Questrom School of Business. The topic of our conversation is the role of antitrust in tech and how various design decisions impact consumer welfare. Among other things, our discussion covers:

The role of antitrust law with respect to digital marketplaces;

Whether digital marketplaces naturally trend toward anticompetitive behavior;

How and whether certain consumer tech design practices can benefit consumers and still be considered anti-competitive;

Amazon's treatment of its own products in search rankings (from Andrey's...

2024-10-09 · 45 min

the no BS podcast

Daily Dose - What Does Banning Short-term Rentals Really Accomplish?

Original article or post - Harvard Business Review - What Does Banning Short-term Rentals Really Accomplish? https://hbr.org/2024/02/what-does-banning-short-term-rentals-really-accomplish by Sophie Calder-Wang, Chiara Farronato, and Andrey Fradkin

Tagging - David Angotti - Chief Evangelist at Guesty, Founder of StaySense

Is there an article or social post you'd like us to respond to on the no BS Daily Dose? If so, please fill out this Google form - https://docs.google.com/forms/d/1EoiHZ5PvtsTqDqvZDrhDWvrkDGPJcTVrhioaPO0S4nY/edit

2024-02-21 · 03 min