Shows

FC Picks: #11. Uncertainty Quantification in ML with Chris Molnar (three sources)

The three sources, mostly by Christoph Molnar and Timo Freiesleben, offer an in-depth look at uncertainty quantification, modeling philosophies, and the application of machine learning in science. Conformal prediction (CP) is presented as a methodology for quantifying the uncertainty of ML models with probabilistic guarantees, notably guaranteed coverage of the true outcome, which is usually marginal (holding on average). It is model-agnostic, distribution-free, and requires no retraining, and it applies to classification (prediction sets), regression...

2025-06-11 · 13 min
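The conformal prediction idea summarized above can be illustrated with a minimal split-conformal sketch for classification. This is not code from the episode or from Molnar's book; it assumes a classifier that outputs class probabilities, uses 1 minus the probability of the true class as the nonconformity score, and substitutes randomly generated probabilities for a trained model. The threshold is the ⌈(n+1)(1−α)⌉-th smallest calibration score, which yields marginal coverage of at least 1 − α when calibration and test data are exchangeable.

```python
import math

import numpy as np


def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Split conformal calibration: return the score threshold q_hat.

    Nonconformity score = 1 - probability assigned to the true class.
    q_hat is the ceil((n + 1) * (1 - alpha))-th smallest calibration score.
    """
    n = len(cal_labels)
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: include every class.
        return np.inf
    return np.sort(scores)[k - 1]


def prediction_sets(test_probs, q_hat):
    """Boolean mask (n_test, n_classes): class j is in the set if 1 - p_j <= q_hat."""
    return (1.0 - test_probs) <= q_hat


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_cal, n_test, n_classes = 2000, 5000, 5
    # Hypothetical stand-in for a trained classifier's probability outputs.
    probs = rng.dirichlet(np.ones(n_classes), size=n_cal + n_test)
    labels = np.array([rng.choice(n_classes, p=p) for p in probs])

    q_hat = conformal_threshold(probs[:n_cal], labels[:n_cal], alpha=0.1)
    sets = prediction_sets(probs[n_cal:], q_hat)
    covered = sets[np.arange(n_test), labels[n_cal:]]
    print(f"q_hat = {q_hat:.3f}")
    print(f"empirical coverage = {covered.mean():.3f} (target >= 0.90)")
    print(f"average set size = {sets.sum(axis=1).mean():.2f}")
```

Because the guarantee is marginal, the 90% coverage holds on average over test points rather than for each individual input, which matches the caveat in the description above.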

Rund um Hund - dein Podcast aus dem Treuhundbüro: Dogs' Barking

No other topic can cause as much conflict between neighbors as dogs barking. In this episode of Rund um Hund, I therefore talk about what barking actually is, what functions it serves for dogs, why the melody of a bark matters a great deal to us dog people, and which categories of barking exist. I also try to answer the question of when a dog barks too much and explain how a dog's barking can be trained.

SOURCES: Abrantes: "Evolution of canine social behavior", 2004; Brads...

2025-04-09 · 33 min

The AI Fundamentalists: Supervised machine learning for science with Christoph Molnar and Timo Freiesleben, Part 2

Part 2 of this series could easily have been renamed "AI for science: The expert's guide to practical machine learning." We continue our discussion with Christoph Molnar and Timo Freiesleben to look at how scientists can apply the supervised machine learning techniques from the previous episode to their research.

Introduction to supervised ML for science (0:00): Welcome back to Christoph Molnar and Timo Freiesleben, co-authors of "Supervised Machine Learning for Science: How to Stop Worrying and Love Your Black Box."

The model as the expert? (1:00): Evaluation metrics have profound downstream effects on all model...

2025-03-27 · 41 min

The AI Fundamentalists: Supervised machine learning for science with Christoph Molnar and Timo Freiesleben, Part 1

Machine learning is transforming scientific research across disciplines, but many scientists remain skeptical about approaches that prioritize prediction over causal understanding. That's why we are excited to have Christoph Molnar return to the podcast with Timo Freiesleben. They are co-authors of "Supervised Machine Learning for Science: How to Stop Worrying and Love Your Black Box." We talk about the perceived problems with automation in certain sciences and find out how scientists can use machine learning without losing scientific accuracy.

• Different scientific disciplines have varying goals beyond prediction, including control, explanation, and reaso...

2025-03-25 · 27 min

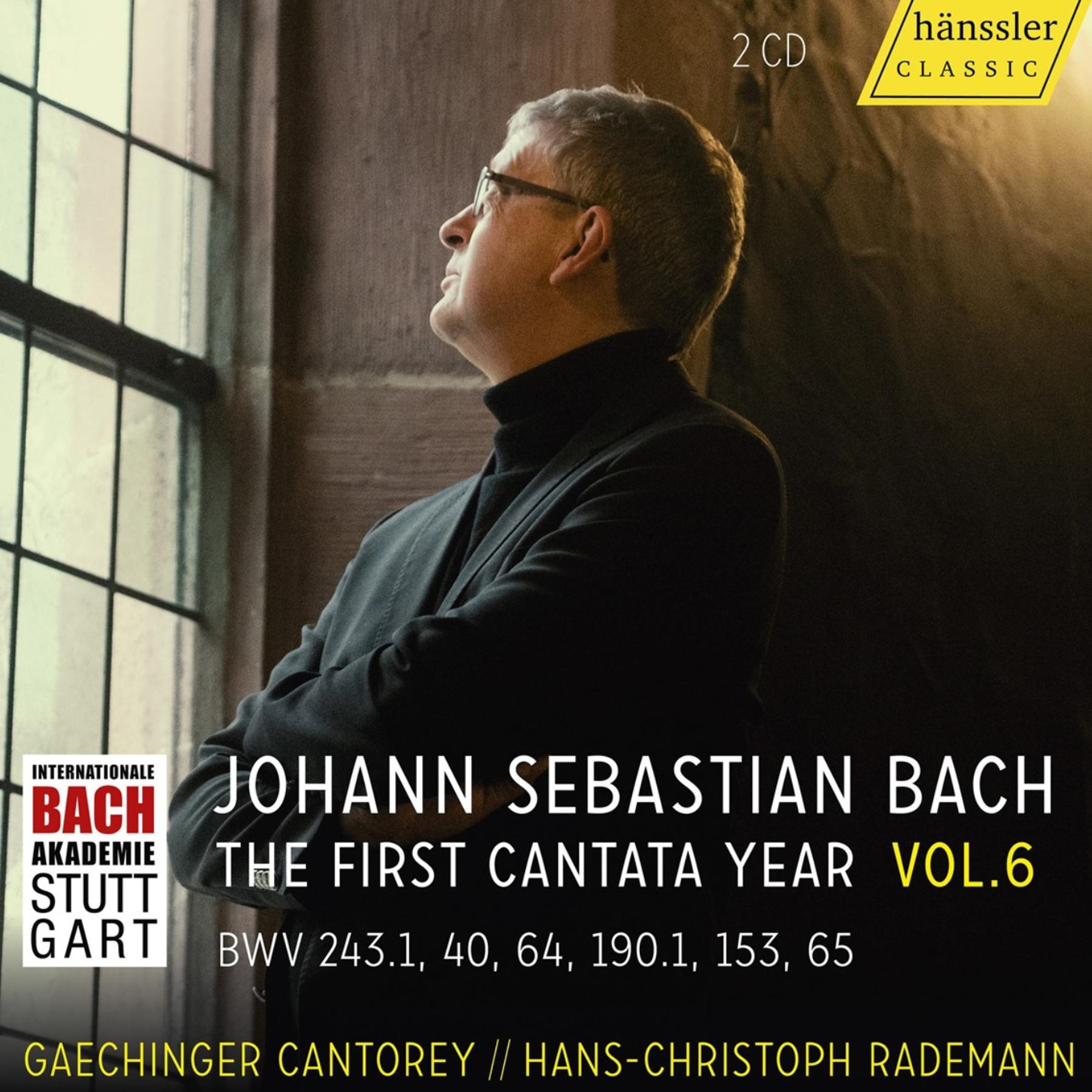

Klassik aktuell: New Album: Bach Cantatas Vol. 6, "Magnificat"

The cantatas of Johann Sebastian Bach are admired as treasures of church music, and complete recordings of them are the supreme discipline. For the Internationale Bachakademie in Stuttgart, such a project is practically an obligation. Its director, Hans-Christoph Rademann, began his complete recording of the Bach cantatas last year. Now the sixth installment has been released, featuring cantatas from Bach's first year as Thomaskantor in Leipzig. Laszlo Molnar has listened to it.

2025-01-15 · 3 min

Tous mélomanes: Family Music!

They are called Bach, Mozart, Stravinsky, and Wagner, but they are not who you think! Not a single note by Johann Sebastian (Bach), Wolfgang Amadeus (Mozart), or Igor (Stravinsky), let alone Richard (Wagner)! The musicians we listen to share the same surnames, but they are relatives: members of the respective families of the composers mentioned above. And they too, thanks to genetics, have a great deal of talent...

Musical references: Johann Christoph Bach: Motet "Lieber Herr Gott, wecke uns auf", Cantus Cölln, dir. Konrad Jungh...

2024-06-06 · 58 min

AI Stories: Interpreting Black Box Models with Christoph Molnar #40

Our guest today is Christoph Molnar, an expert in interpretable machine learning and a book author. In our conversation, we dive into the field of interpretable ML. Christoph explains the difference between post hoc and model-agnostic approaches, as well as between global and local model-agnostic methods. We dig into several interpretable ML techniques, including permutation feature importance, SHAP, and LIME. We also talk about the importance of interpretability and how it can help you build better models and have an impact on businesses. If you enjoyed the episode, please leave a 5-star review and subscribe to the AI Stories Y...

2024-01-10 · 55 min
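Permutation feature importance, one of the techniques named in the episode above, can be sketched in a few lines: shuffle one feature column, re-score the model, and record how much the error grows. This is an illustrative assumption rather than the method as presented in the episode: it uses mean squared error as the loss, a least-squares linear model fitted on synthetic data as the "black box", and averages over a few random shuffles. scikit-learn ships a fuller implementation as `sklearn.inspection.permutation_importance`.

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean increase in MSE when each feature column is randomly shuffled."""
    rng = np.random.default_rng(seed)
    base_mse = np.mean((y - predict(X)) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Break the link between feature j and the target while keeping
            # the feature's marginal distribution intact.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            increases.append(np.mean((y - predict(X_perm)) ** 2) - base_mse)
        importances[j] = np.mean(increases)
    return importances


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    X = rng.normal(size=(2000, 3))
    # Synthetic target: strong effect of x0, weak effect of x1, no effect of x2.
    y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=2000)

    # Fit ordinary least squares with an intercept column.
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)

    def predict(Z):
        return Z @ coef[:-1] + coef[-1]

    for name, imp in zip(["x0", "x1", "x2"], permutation_importance(predict, X, y)):
        print(f"{name}: {imp:.3f}")
```

For independent standard-normal features, shuffling column j raises the MSE by roughly twice the squared coefficient, so x0 should dominate, x1 should be small but positive, and x2 should be close to zero.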

The AI Fundamentalists: AI regulation, data privacy, and ethics - 2023 summarized

It's the end of 2023 and our first season. The hosts reflect on what's happened with the fundamentals of AI regulation, data privacy, and ethics. Spoiler alert: a lot! And we're excited to share our outlook for AI in 2024.

AI regulation and its impact in 2024.

Hosts reflect on AI regulation discussions from their first 10 episodes, discussing what went well and what didn't.

Its potential impact on innovation. 2:36

AI innovation, regulation, and best practices. 7:05

AI, privacy, and data security in healthcare. 11:08

Data scientists face contradictory goals of fairness and privacy in AI, with increased interest in...

2023-12-19 · 31 min

DataTalks.Club: Cracking the Code: Machine Learning Made Understandable - Christoph Molnar

We talked about:

Christoph’s background

Kaggle and other competitions

How Christoph became interested in interpretable machine learning

Interpretability vs Accuracy

Christoph’s current competition engagement

How Christoph chooses topics for books

Why Christoph started the writing journey with a book

Self-publishing vs via a publisher

Christoph’s other books

What is conformal prediction?

Christoph’s book on SHAP

Explainable AI vs Interpretable AI

Working alone vs with other people

Christoph’s other engagements and how to stay hands-on

Keeping a logbook

Does one have to be an expert on the topic to write a book about it?

Writing in...

2023-11-27 · 51 min

The AI Fundamentalists: Modeling with Christoph Molnar

Episode 4. The AI Fundamentalists welcome Christoph Molnar to discuss the characteristics of a modeling mindset in a rapidly innovating world. He is the author of multiple data science books, including Modeling Mindsets, Interpretable Machine Learning, and his latest book, Introduction to Conformal Prediction with Python. We hope you enjoy this enlightening discussion from a model builder's point of view. To keep in touch with Christoph's work, subscribe to his newsletter Mindful Modeler: "Better machine learning by thinking like a statistician. About model interpretation, paying attention to data, and always staying critical."

Summary

I...

2023-07-25 · 27 min

Practical AI: Copilot lawsuits & Galactica "science"

There are some big AI-related controversies swirling, and it's time we talk about them. A lawsuit has been filed against GitHub, Microsoft, and OpenAI related to Copilot code suggestions, and many people have been disturbed by the output of Meta AI's Galactica model. Does Copilot violate open source licenses? Does Galactica output dangerous science-related content? In this episode, we dive into the controversies and risks, and we discuss the benefits of these technologies.

Featuring:

Chris Benson – Website, GitHub, LinkedIn, X

Daniel Whitenack – Website, GitHub, X

Show Notes:

Related to Copilot...

2022-11-29 · 44 min

Investigando la investigación: 164. SIBILA: research and development in interpretable machine learning through supercomputing for personalized medicine

Previous episode on the H2020 REVERT project: https://anchor.fm/horacio-ps/episodes/Proyecto-Europeo-H2020-REVERT-Medicina-personalizada-mediante-inteligencia-artificial-etpfse

Review paper mentioned: https://www.mdpi.com/1422-0067/22/9/4394

Christoph Molnar's book "Interpretable Machine Learning": https://christophm.github.io/interpretable-ml-book/

SIBILA paper: https://arxiv.org/abs/2205.06234

SIBILA code: https://github.com/bio-hpc/sibila

BIO-HPC Discord community: https://discord.gg/gFNjXGNVxx

BRUSELAS paper: https://pubs.acs.org/doi/abs/10.1021/acs.jcim.9b00279

Antonio Jesús Banegas Luna's thesis: http://repositorio.ucam.edu/handle/10952/4124

2022-06-13 · 1h 24

On the Way to Effective Finance: #15 Uta Molnar of Siemens Building Products on the commercial CFO perspective and audience-appropriate communication

"The finance function must evolve from a number cruncher into a data management organization."

Uta Molnar is CFO of Siemens Building Products Deutschland, part of Siemens' Smart Infrastructure division. As one of the market leaders in building technology, Building Products aims to use its technology to adapt living and working in buildings to the changing conditions and needs of their occupants: digitalization and smart products will intelligently connect the physical and digital worlds in a building ecosystem, making buildings more comfortable, safer, more efficient, and more sustainable. Uta Molnar was previously...

2022-04-04 · 30 min

>reboot academia: Publishing and Open Books

Christoph Molnar is a scientist working on explainable artificial intelligence. In this episode, I talk with him about his book "Interpretable Machine Learning", which he makes available as a website under an open license. We discuss the magic of open licenses and what scientific publishing could look like in the future.

Disclaimer: Christoph is my husband, so I already knew many of his answers beforehand and know a lot about him. Still, I learned a few new things from him too.

Links:

Christoph's Twitter: https://twitter.com/ChristophMolnar

Christoph's website: https://www.mlnar.com/

Book as...

2021-10-26 · 37 min

Data Futurology - Leadership And Strategy in Artificial Intelligence, Machine Learning, Data Science: #70 RE-RUN: Making Black Box Models Explainable with Christoph Molnar – Interpretable Machine Learning Researcher

Christoph Molnar is a data scientist and Ph.D. candidate in interpretable machine learning. He is interested in making the decisions of algorithms more understandable to humans. Christoph is passionate about using statistics and machine learning on data to make humans and machines smarter.

In this episode, Christoph explains how he decided to study statistics at university, which eventually led him to his passion for machine learning and data. Starting out studying with a senior researcher gave Christoph exposure to many different projects. It is an excellent program for students and companies, who both benefit greatly...

2021-08-24 · 1h 15

The Effort Report: 135 - Covert (Resource) Affairs

Elizabeth and Roger cover a variety of small things, grant follow-up, and how to think about covert or hidden resources.

Show Notes:

Christoph Molnar thread about academia

Anne Silverman thread about cover letters

Roger on Twitter

Elizabeth on Twitter

Effort Report on Twitter

Get Roger's and Elizabeth's book, The Art of Data Science

Subscribe to the podcast on Apple Podcasts

Subscribe to the podcast on Google Play

Find past episodes

Contact us at th...

2021-03-24 · 51 min

Machine Learning Street Talk (MLST): 047 Interpretable Machine Learning - Christoph Molnar

Christoph Molnar is one of the main people to know in the space of interpretable ML. In 2018, he released the first version of his incredible online book, Interpretable Machine Learning. Interpretability is often a deciding factor when a machine learning (ML) model is used in a product, a decision process, or research. Interpretability methods can be used to discover knowledge, to debug or justify the model and its predictions, to control and improve the model, to reason about potential bias in models, and to increase the social acceptance of models. But interpretability methods can also be quite...

2021-03-14 · 1h 40

Medical Device Insights: 2020-12: Interpretability of Machine Learning Models

Pitfalls when using machine learning models and when trying to interpret them

Christoph Molnar's book on interpretability

Article on the regulatory requirements for medical devices that use machine learning

Technical article: Pitfalls to Avoid when Interpreting Machine Learning Models

Video trainings in the Auditgarant

Guideline on AI in medical devices (basis for the WHO guideline and the notified bodies' guideline)

2020-11-17 · 19 min

Machine Learning Street Talk (MLST): Explainability, Reasoning, Priors and GPT-3

This week Dr. Tim Scarfe and Dr. Keith Duggar discuss explainability, reasoning, priors, and GPT-3. We check out Christoph Molnar's book on interpretability, talk about priors vs. experience in NNs and whether NNs are reasoning, and also cover articles by Gary Marcus and Walid Saba critiquing deep learning. We finish with a brief discussion of Chollet's ARC challenge and intelligence paper.

00:00:00 Intro

00:01:17 Explainability and Christoph Molnar's book on Interpretability

00:26:45 Explainability - Feature visualisation

00:33:28 Architecture / CPPNs

00:36:10 Invariance and data parsimony, priors and experience, manifolds

00:42:04 What NNs l...

2020-09-16 · 1h 25

Data Futurology - Leadership And Strategy in Artificial Intelligence, Machine Learning, Data Science: #110 Explainable AI Methods for Structured Data

During this special episode, Felipe gives a presentation on explainable AI methods for structured data. First, Felipe talks about opening the black box. Algorithms can be both sexist and racist, even at massive companies like Google and Amazon. Removing bias in AI is a difficult problem. However, there are ways to overcome it. Where does the bias come from? The dirty secret is that the data is biased. The algorithm doesn't decide to be biased; it learns to be biased from the data. In reality, AI holds a mirror up to society. We have inherent sexism and racism in ou...

2020-05-15 · 37 min

Data Skeptic: Interpretability

Machine learning has shown a rapid expansion into every sector and industry. With increasing reliance on models and increasing stakes for the decisions of models, questions of how models actually work are becoming increasingly important to ask. Welcome to Data Skeptic Interpretability. In this episode, Kyle interviews Christoph Molnar about his book Interpretable Machine Learning. Thanks to our sponsor, the Gartner Data & Analytics Summit going on in Grapevine, TX on March 23–26, 2020. Use discount code: dataskeptic.

Music: Our new theme song is #5 by Big D and the Kids Table...

2020-01-07 · 32 min

Data Futurology - Leadership And Strategy in Artificial Intelligence, Machine Learning, Data Science: #70 Making Black Box Models Explainable with Christoph Molnar – Interpretable Machine Learning Researcher

Christoph Molnar is a data scientist and Ph.D. candidate in interpretable machine learning. He is interested in making the decisions of algorithms more understandable to humans. Christoph is passionate about using statistics and machine learning on data to make humans and machines smarter.

Enjoy the show!

We speak about:

[02:10] How Christoph started in the data space

[09:25] Understanding what a researcher needs

[15:15] Skills learned from software engineers

[16:00] Statistical consulting

[19:50] Labeling data

[23:00] Christoph is pursuing his Ph.D.

[29:00] Why is interpretable machine learning needed now?

[31:00...

2019-09-24 · 55 min

Practical AI: Model inspection and interpretation at Seldon

Interpreting complicated models is a hot topic. How can we trust and manage AI models that we can't explain? In this episode, Janis Klaise, a data scientist with Seldon, joins us to talk about model interpretation and Seldon's new open source project called Alibi. Janis also gives some of his thoughts on production ML/AI and how Seldon addresses related problems.

Sponsors:

DigitalOcean – Check out DigitalOcean's dedicated vCPU Droplets with dedicated vCPU threads. Get started for free with a $50 credit. Learn more at do.co/changelog.

DataEngPodcast – A podcast about data engine...

2019-06-17 · 43 min