Shows

一位小米前高管的AI学习日报
AIOS: LLM Agent Operating System
The source introduces AIOS, an innovative Large Language Model (LLM) agent operating system designed to optimize the performance and deployment of LLM-based intelligent agents. It addresses key challenges like suboptimal scheduling, resource allocation, and context management by integrating LLMs as the "brain" of the operating system. AIOS features a layered architecture including application, kernel (OS and LLM kernels), and hardware layers, with the LLM kernel providing crucial modules for agent scheduling, context management, memory/storage management, and tool/access control. The system aims to facilitate concurrent execution of multiple agents and enhance their ability to solve complex, real-world tasks...
2025-07-26, 29 min

Inside Algomatic
#116 AI/LLM Academic News Weekly #14: Customizing LLMs in Seconds with Drag-and-Drop LLMs (DnD)
"AI/LLM Academic News Weekly" is a weekly discussion of the latest AI/LLM research news. The facilitator is 岩城, an AI engineer at the AI Transformation (AX) Company, and the speaker is 渋谷, an AI engineer. Episode 14 introduces "Drag-and-Drop LLMs (DnD)". Given only a prompt, Drag-and-Drop LLMs (DnD) completes in seconds a weight update that achieves nearly the same effect as LLM fine-tuning, which conventionally took hours. This makes it possible to adapt a model to a specific task quickly and to switch models in real time. Listen to the podcast for details.
Cast:
AI Transformation (AX) AI engineer 岩城 (@yukl_dev)
AI Transformation (AX) AI engineer 渋谷 (@sergicalsix)
note: https://note.com/algomatic_oa/n/n3dd05738f8b1
Paper link: https://arxiv.org/abs/2506.16406
The Algomatic group is hiring. If you are interested in generative AI/LLM technology like that covered in "AI/LLM Academic News Weekly", or in Algomatic itself, feel free to get in touch for a casual interview first. Careers: https://jobs.algomatic.jp/
2025-07-10, 10 min

PaperLedge
Computation and Language - Efficiency-Effectiveness Reranking FLOPs for LLM-based Rerankers
Hey PaperLedge crew, Ernis here, ready to dive into some seriously cool research! Today, we're tackling a paper that's all about making those super-smart Large Language Models, or LLMs, work smarter, not just harder, when it comes to finding you the info you need.

Now, you've probably heard of LLMs like ChatGPT. They're amazing at understanding and generating text, and researchers have been using them to improve search results – it's like having a super-powered librarian that knows exactly what you're looking for. This is done by reranking search results; taking the initial list from a search engine an...
2025-07-09, 06 min

Pi Tech
News: ethical problems of LLMs, AI in science fiction and in real life, should everyone learn to program?
AI already generates code, creates content, and even checks its own work, but can it think?
🔹 a local LLM model from Microsoft
🔹 Apple stays silent about its own AI strategy
🔹 current models have significant limitations in reasoning and understanding
We also talk about how AI is changing content creation, code review, and even behavior in game simulations. In one experiment, LLM models played the game Diplomacy, and the results turned out surprisingly "human": some lied, others negotiated, and some acted aggressively.
Ethics, labor market stability, the future of human creativity: we cover all of this in the new episode.
00:32 - overview of the local LLM model from Microsoft
02:00 - AI development at Apple and the related challenges
04:22 - capabilities and limitations of LLMs
09:30 - ethical issues in AI and LLMs
15:46 - LLMs in strategy games
23:08 - AI video generation and the future of content creation
33:43 - the danger of forever chemicals
38:43 - autonomous vehicles and the role of AI in delivery
43:10 - copyright protection
49:38 - the impact of AI on the tech industry

2025-07-01, 56 min

The Platform Playbook
LLM Security Exposed! Breaking Down the Zero-Trust Blueprint for AI Workloads
In this episode, we break down our recent YouTube video, "LLM Security Exposed!", where we explore the rising security risks in Large Language Model (LLM) deployments and how Zero-Trust principles can help mitigate them.
🔍 We dive deeper into:
The top LLM threats you can't afford to ignore, from prompt injection to data leakage and malicious packages
Why LLM applications need the same level of protection as any production workload
What a Zero-Trust Architecture looks like in the AI space
How tools like LLM Guard, Rebuff, Vigil, Guardrail AI, and Kubernete...
2025-06-27, 25 min

Macro Lab 總經實驗室
EP28 | AI: Freedom or Shackles? Open-Source vs. Closed-Source LLMs, Which Path Is the Future? SWOT Deep Dive (AI Voice)

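This episode of Macro Lab 總經實驗室 takes you from concept to strategy for a full view of the core differences between open-source and closed-source large language models (LLMs): why did Meta choose openness while Google, OpenAI, and Anthropic took the commercial route? Using practical cases and an up-to-date SWOT analysis, we help you quickly grasp each side's strengths, weaknesses, and risks, and make the best choice in a complex market.

Chin takes you into the core conversation on choosing an LLM: when cost, privacy, performance, and customizability compete with one another, how should enterprises and developers weigh the trade-offs?

1️⃣ Open-source LLMs in detail [0:49]
Meta Llama and the Hugging Face community ecosystem offer a high degree of freedom
High transparency plus community collaboration: biases can be inspected and iteration is fast
No licensing fees and reproducible research, but you bear the security and maintenance costs

2️⃣ SWOT analysis of open-source LLMs [1:14]
Strengths: technical transparency, cost flexibility, community-driven
Weaknesses: risk of misuse, technical barriers to entry, sustainability challenges
Opportunities: regulatory compliance advantages, edge computing applications
Threats: ecosystem fragmentation, limits on commercial monetization

3️⃣ Closed-source LLMs in detail [3:00]
Commercial models such as the GPT series, Google Gemini, and Claude
Curated training data plus enterprise-grade security controls
A good ready-to-use API experience, but low customization flexibility and a need to trust the vendor

4️⃣ SWOT analysis of closed-source LLMs [3:06]
Strengths: proprietary technology moat, commercialization efficiency, clear accountability
Weaknesses: black-box risk, vendor lock-in, insufficient transparency
Opportunities: multimodal integration, vertical-domain specialization
Threats: open source catching up, rising regulatory pressure

5️⃣ Future trends and strategy comparison [3:11]
Rise of hybrid models: open-source foundation plus closed-source value-added services
Regulation drives openness: enterprises need to stay transparent and compliant
Keys to model selection: decide based on use case, data sensitivity, and team capability

🎯 Why you should listen
A comprehensive basis for decisions: combines SWOT with practical selection criteria, helping you work at both the strategic and the implementation level
Risks and countermeasures: learn how to use designs such as Reflection Prompts and Cognitive Scaffold to strengthen safety and reliability in AI applications

🔔 Listen now → Subscribe to Macro Lab for more in-depth analysis of technology × economics × society!
👍 Like and share, and join us on IG @amateur_economists | Medium | Ten minutes every morning: 「通勤咖啡」 (Commute Coffee) brings you insight into what moves the world.

Macro Lab: Macroeconomics decoded for builders and doers, because the big picture drives better business.

(Readers are welcome to dig into the original literature behind the SWOT analysis and research reports discussed in this episode and to share their thoughts!)

Reference
Mohammad et al. (2024), "Exploring LLMs: A systematic review with SWOT analysis"

--
Hosting provided by SoundOn
2025-06-26, 13 min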

PaperLedge
Artificial Intelligence - The Effect of State Representation on LLM Agent Behavior in Dynamic Routing Games
Hey PaperLedge crew, Ernis here, ready to dive into some fascinating research! Today, we're talking about Large Language Models, or LLMs – think of them as super-smart chatbots – and how we can use them to make decisions in complex situations, like playing games.

Now, LLMs have a bit of a memory problem. They don't naturally remember what happened in the past, which is kind of a big deal when you're trying to, say, play a game that unfolds over multiple rounds. Imagine playing chess, but forgetting all the moves that came before your turn! That's where this paper come...
2025-06-21, 06 min

Study for the Bar in Your Car
Evidence - Hearsay - Part 1
Ready to decode the courtroom? Dive into "Evidence - Hearsay - Part 1," brought to you by "Study for the Bar in Your Car." Your hosts, Ma and Claude, unpack the often-confusing world of hearsay, using Angela’s incredibly detailed notes, the product of an LLM law student and former judicial law clerk. This episode is your essential guide to understanding the foundational definition of hearsay and, crucially, why it's generally inadmissible due to reliability concerns, the lack of oath, and the absence of cross-examination.
We illuminate key categories of statements that are NOT hearsay at all, meaning you...
2025-06-18, 34 min

PaperLedge
Computation and Language - Steering LLM Thinking with Budget Guidance
Alright learning crew, Ernis here, ready to dive into some fascinating research that's all about making our AI overlords... I mean, helpful assistants... think smarter, not necessarily longer.

We're talking about Large Language Models, or LLMs – those powerful AIs that can write essays, answer questions, and even code. Think of them as super-smart students, but sometimes, they get a little too caught up in their own thought processes. Imagine giving a student a simple math problem, and they fill up pages and pages with calculations, even though a shorter, more direct approach would have worked just as we...
2025-06-17, 07 min

Study for the Bar in Your Car
Evidence - Relevancy and the Exclusion of Evidence
Ready to unravel the intricacies of evidence law? Tune into "Study for the Bar in Your Car" episode three, "Relevancy and the Exclusion of Evidence", where hosts Ma and Claude, powered by the incredibly detailed notes of LLM law student and former judicial law clerk Angela, guide you through the fundamental principles that determine what information gets heard in court.
This deep dive is your essential resource for understanding how the legal system filters information. We dissect the absolute baseline of relevancy: when evidence has any tendency to make a fact more or less probable and that fa...
2025-06-15, 38 min

Study for the Bar in Your Car
Evidence - Presentation of Evidence
Ready to decode the courtroom? Dive into "Evidence - Presentation of Evidence," episode two of "Study for the Bar in Your Car," where hosts Ma and Claude (powered by Angela’s incredibly detailed notes as an LLM law student and former judicial law clerk) break down the crucial rules governing how information actually makes it before a judge and jury.
This essential deep dive illuminates the foundational concepts that dictate witness testimony and evidence handling, offering you a shortcut to understanding the practical mechanics of a trial.
You’ll uncover insights into:
Witness Competency: The...
2025-06-14, 16 min

Study for the Bar in Your Car
Evidence - Introduction
Are you ready to master evidence law? Dive deep into the fundamental rules that dictate what information makes it into court with "Study for the Bar in Your Car." Your hosts, Ma and Claude, unpack the complexities of evidence using incredibly detailed notes generously provided by Angela, an LLM law student and former judicial law clerk. Angela, a real-life human being who dealt with evidence issues constantly as a clerk, contributed significantly to the material.
This essential podcast acts as your shortcut to understanding the fundamental rules that shape how facts are determined in...
2025-06-13, 36 min

Elixir Wizards
LangChain: LLM Integration for Elixir Apps with Mark Ericksen
Mark Ericksen, creator of the Elixir LangChain framework, joins the Elixir Wizards to talk about LLM integration in Elixir apps. He explains how LangChain abstracts away the quirks of different AI providers (OpenAI, Anthropic’s Claude, Google’s Gemini) so you can work with any LLM through one more consistent API. We dig into core features like conversation chaining, tool execution, automatic retries, and production-grade fallback strategies.

Mark shares his experiences maintaining LangChain in a fast-moving AI world: how it shields developers from API drift, manages token budgets, and handles rate limits and outages. He also reveals test...
2025-06-12, 38 min

Today's tech news
A deep dive into 『cleo』, a new app experience combining Kindle reading with an LLM! | AI Podcast
🎙️ AI-generated podcast
📰 Source article
Title: jpa (@josephpalbanese): "new project: cleo (kindle + llm) 📚 cleo is an ios app that pairs whatever book you're reading on kindle with an llm (o3) - ask questions, get recaps/summaries, or listen/discuss (think audiobooks but interactive). the llm has context on exactly where you are + the book contents. best part? no complex setup needed. just link your kindle account and that's it. it just works. reply / rt for testflight invite 👇" | nitter
URL: https://nitter.net/josephpalbanese/status/1927444663776002302
📝 Episode summary
Two hosts dig into 『cleo』, an innovative reading app for iOS, and the appeal of the new reading experience it creates by combining Kindle with a large language model (LLM). The discussion covers its easy, setup-free use, the potential of its interactive features, and its future outlook from several angles.
🔑 Key points
1. 『cleo』 is an iOS app that pairs with the book you are reading on Kindle and uses an LLM (large language model) for question answering, summaries, read-aloud, and discussion.
2. Setup is very simple: just link your Kindle account to get started.
3. Because the LLM knows your exact position in the book and the book's contents, it can give answers that fit the context.
4. It offers an interactive reading experience, distinguished from conventional audiobooks by being two-way.
5. Open issues and expectations remain, such as how privacy is handled and a possible Android version.
📚 About the reference article
https://nitter.net/josephpalbanese/status/1927444663776002302
🗣️ Conversation script (excerpt)
Speaker1: Hello! Today we have an exciting new app to talk about. It's 『cleo』, a dream-like iOS app that links to the book you're reading on Kindle so you can ask questions or listen to summaries!
Speaker2: Hello. 『cleo』, even the name is cute. It's a new project that combines Kindle with a large language model, or LLM, to make reading more interactive. Very interesting.
Speaker1: That's right. According to the article, 『cleo』 understands the contents of the book you're reading on Kindle, including the context of where you are, and can answer questions, provide summaries, or even read aloud and discuss like an audiobook. It really is a new reading experience.
Speaker2: I see. Ordinary audiobooks are one-way, but this lets you dig into the book interactively. Being able to ask about passages you don't understand or request a summary helps with reading and seems likely to improve learning too.
Speaker1: Yes, and the biggest point is that setup is super easy. You just link your Kindle account, so the technical hurdle is low. That matters a lot. Even a handy tool goes unused if it's hard to set up.
Speaker2: Exactly. User-friendly design is key to adoption. By the way, the LLM used is reportedly a model called O3, and it's thanks to advances in large language models that this kind of natural-language reading experience is possible.
Speaker1: Right, right. ...(listen to the audio for the rest)
🤖 About this podcast
This episode is a podcast automatically generated from an article by AI (artificial intelligence). It explains the article in an easy-to-follow, natural conversation between two speakers.
Generated: 2025/5/30 14:30:12
2025-05-30, 03 min

Verbos: AI og Softwareudvikling
#89 - LLMs Locally in the Browser with Rasmus Aagaard and Jakob Hoeg Mørk
In this episode of Verbos Podcast, host Kasper Junge discusses the latest advances in Large Language Models (LLMs) and their use in the browser with Rasmus Aagaard and Jakob Hoeg Mørk. They cover topics such as use cases, technologies, challenges, and the future of LLMs in the browser, as well as how developers can get started implementing these models.
Read Rasmus Aagaard's transformers.js tutorial here: https://rasgaard.com/posts/getting-started-transformersjs/
Chapters
00:00 Introduction to LLMs in the Browser
03:01 Experiences with LLMs and Open...
2025-05-29, 57 min

AI+Crypto FM
[Keeper Edition] What You Were Afraid to Ask: From the Origins of AI to How LLMs, RAG, and AI Agents Work, and Their History (Part 1)
*Re-uploaded because the audio at the end was missing!
YouTube: https://youtu.be/6YTX2ofptEQ
kinjo https://x.com/illshin | AKINDO: https://x.com/akindo_io
Kanazawa: https://x.com/k_another_wa
The Key to Winning the AI Agent Era: Lightning Talks on Cutting-Edge Enterprise MCP Use Cases [東京AI祭 Pre-Event]
From Mere Notes to Intellectual Assets: The 松濤Vimmer-Style "Obsidian in Cursor" Knowledge Production System
Chapters
00:00 Latest trends in AI and crypto
03:03 How AI agents work and their history
06:05 AI event introductions and how to join
09:00 The definition of AI and its reach
12:04 History and evolution of the AI booms
12:26 History and evolution of AI
14:50 The arrival of deep learning
17:04 The importance of data and the impact of AI
18:24 Professor Hinton and the development of deep learning
20:16 The difference between machine learning and deep learning
25:41 The evolution of large language models
27:55 How the Transformer works
30:06 Data processing in the Transformer
31:59 The relationship between parameters and compute resources
35:00 The evolution and practicality of GPT
38:06 The limits of AI and future possibilities
38:42 The limits and future of AI
41:30 The difference between human and AI intelligence
44:49 The difference between open-source and closed models
49:57 The significance of open source and corporate strategy
50:35 Blockchain and the value of tokens
51:38 Challenges for Japanese-language AI models
52:59 The current state of AI development in Japan
54:45 Choosing between open source and APIs
56:00 GPUs and the future of AI companies
58:42 NVIDIA's technical edge
01:00:45 Comparing AI model performance
01:02:48 Next episode's topic and wrap-up
1. AI basics: what is AI anyway?
- The history of AI
- A simple look at machine learning vs. deep learning
- What is an LLM (large language model)? Behind the scenes of ChatGPT
2. How LLMs work and how they evolved
- What is the Transformer?
- The evolution of the GPT series
- LLM training methods and limits
- Open-source / closed LLMs
- How to build an LLM...
2025-05-29, 1h 01

AI+Crypto FM
[Keeper Edition] What You Were Afraid to Ask: From the Origins of AI to How LLMs, RAG, and AI Agents Work, and Their History (Part 1)
YouTube: https://youtu.be/5V4A_6fdDQo
kinjo https://x.com/illshin | AKINDO: https://x.com/akindo_io
Kanazawa: https://x.com/k_another_wa
The Key to Winning the AI Agent Era: Lightning Talks on Cutting-Edge Enterprise MCP Use Cases [東京AI祭 Pre-Event]
From Mere Notes to Intellectual Assets: The 松濤Vimmer-Style "Obsidian in Cursor" Knowledge Production System
1. AI basics: what is AI anyway?
- The history of AI
- A simple look at machine learning vs. deep learning
- What is an LLM (large language model)? Behind the scenes of ChatGPT
2. How LLMs work and how they evolved
- What is the Transformer?
- The evolution of the GPT series
- LLM training methods and limits
- Open-source / closed LLMs
- How do you build an LLM?
- What does building with an LLM look like?
3. What is RAG, and why is it getting attention?
- How Retrieval-Augmented Generation works
- Why is an LLM alone not enough?
- Benefits and challenges of retrieval plus generation
4. What is an AI agent?
- How it differs from a simple chatbot
- Memory, tools, planning: what is inside an agent
- Examples: AutoGPT and OpenAI's Agents
- How it differs from AI agents in the web3 context
5. Future developments
- Convergence with blockchain
- How we see a future in which AGI and ASI arrive
- Challenges as AI becomes pervasive
- What we hope for from AI progress this year
2025-05-28, 1h 01

Daily Paper Cast
Deciphering Trajectory-Aided LLM Reasoning: An Optimization Perspective

🤗 Upvotes: 33 | cs.CL, cs.AI

Authors:

Junnan Liu, Hongwei Liu, Linchen Xiao, Shudong Liu, Taolin Zhang, Zihan Ma, Songyang Zhang, Kai Chen

Title:

Deciphering Trajectory-Aided LLM Reasoning: An Optimization Perspective

Arxiv:

http://arxiv.org/abs/2505.19815v1

Abstract:

We propose a novel framework for comprehending the reasoning capabilities of large language models (LLMs) through the perspective of meta-learning. By conceptualizing reasoning trajectories as pseudo-gradient descent updates to the LLM's parameters, we identify parallels between LLM reasoning and various meta-learning paradigms. We formalize the training process for reasoning tasks as...
2025-05-28, 21 min

Study for the Bar in Your Car
Civil Procedure - Bonus Episode - Essay Questions
Ready for a unique approach to bar exam practice? Dive into our bonus episode of Study for the Bar in Your Car! Angela, our LLM law student host, pushes the boundaries of bar prep by putting NCBE MEE Civil Procedure questions into AI and having it generate new, original questions based on the patterns.
In this special episode, Angela shares and breaks down the first AI-generated essay question, focusing on the critical topic of Subject Matter Jurisdiction. Angela reads the challenging fact pattern involving complex issues of diversity and amount in controversy. Then, her AI...
2025-05-27, 29 min

Study for the Bar in Your Car

Civil Procedure - Wrap up and Review

Ready to conquer Civil Procedure? Join Ma and Claude on Study for the Bar in Your Car for a comprehensive wrap-up of this challenging but crucial subject! Drawing on Angela's detailed notes – informed by her LLM studies and experience as a judicial law clerk who saw these rules in action – this episode is your roadmap from the start of a lawsuit all the way through appeal. It focuses on what's critical for the bar exam and where pitfalls lie.

We tackle the foundational building blocks you need to nail the bar and navigate litigation:

Jurisdiction & Venue: Mast...

2025-05-26 | 32 min

Best AI papers explained

Auto-Differentiating Any LLM Workflow: A Farewell to Manual Prompting

This document introduces LLM-AutoDiff, a novel framework for Automatic Prompt Engineering (APE) that aims to automate the challenging task of designing prompts for complex Large Language Model (LLM) workflows. By viewing these workflows as computation graphs where textual inputs are treated as trainable parameters, the system uses a "backward engine" LLM to generate textual gradients – feedback that guides the iterative improvement of prompts. Unlike previous methods that focus on single LLM calls, LLM-AutoDiff supports multi-component pipelines, including functional operations like retrieval, handles cycles in iterative processes, and separates different parts of prompts (like instructions and examples) into peer nodes fo...

2025-05-23 | 19 min

GitHub Daily Trend

GitHub - llm-d/llm-d: llm-d is a Kubernetes-native high-performance distributed LLM inference fra...

https://github.com/llm-d/llm-d

llm-d is a Kubernetes-native high-performance distributed LLM inference framework - llm-d/llm-d

Powered by VoiceFeed. https://voicefeed.web.app/lp/podcast?utm_source=githubtrenddaily&utm_medium=podcast

Developer: https://twitter.com/_horotter

2025-05-21 | 02 min

Study for the Bar in Your Car

Civil Procedure - Introduction

Looking for a smart way to maximize your bar exam study time, especially on the go? Welcome to Study for the Bar in Your Car!

Created by Angela, an LLM law student who feels like she's been studying forever, this podcast is designed to provide actual bar study content in an audio format. Tired of not finding resources for studying while driving or traveling?

In this deep dive episode, your study companion focuses squarely on a foundational area critical for bar success: Civil Procedure. Think of this episode like a roadmap, covering the journey from...

2025-05-19 | 37 min

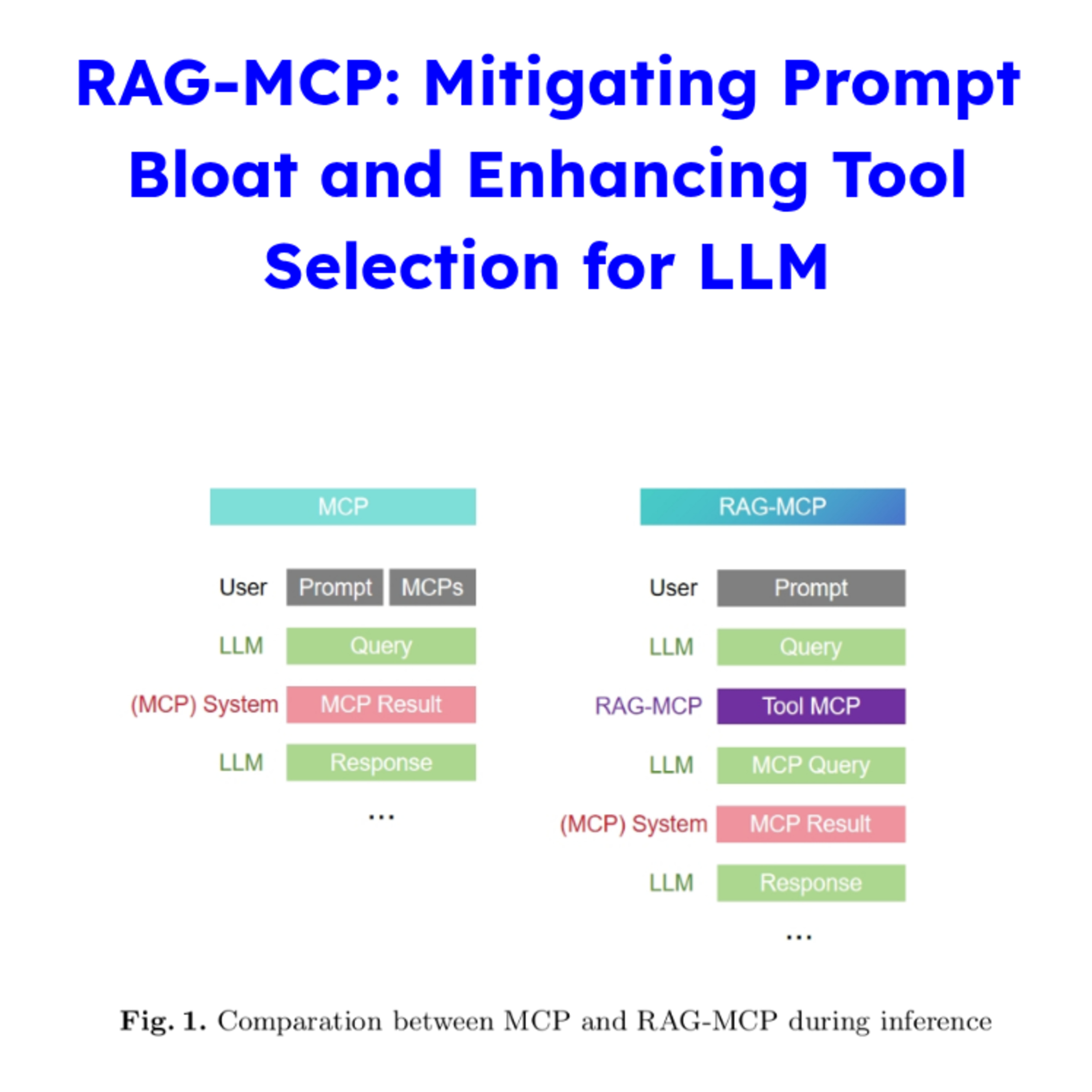

GenAI Level UP

RAG-MCP: Mitigating Prompt Bloat and Enhancing Tool Selection for LLM

Large Language Models (LLMs) face significant challenges in effectively using a growing number of external tools, such as those defined by the Model Context Protocol (MCP). These challenges include prompt bloat and selection complexity. As the number of available tools increases, providing definitions for every tool in the LLM's context consumes an enormous number of tokens, risking overwhelming and confusing the model, which can lead to errors like selecting suboptimal tools or hallucinating non-existent ones.

To address these issues, the RAG-MCP framework is introduced. This approach leverages Retrieval-Augmented Generation (RAG) principles applied to tool selection. Instead of...

2025-05-13 | 13 min

Experiencing Data w/ Brian T. O’Neill (UX for AI Data Products, SAAS Analytics, Data Product Management)

169 - AI Product Management and UX: What’s New (If Anything) About Making Valuable LLM-Powered Products with Stuart Winter-Tear

Today, I'm chatting with Stuart Winter-Tear about AI product management. We're getting into the nitty-gritty of what it takes to build and launch LLM-powered products for the commercial market that actually produce value. Among other things in this rich conversation, Stuart surprised me with the level of importance he believes UX has in making LLM-powered products successful, even for technical audiences.

After spending significant time on the forefront of AI’s breakthroughs, Stuart believes many of the products we’re seeing today are the result of FOMO above all else. He shares a belief...

2025-05-13 | 1h 01

Innovation on Arm

EP07 I From Cloud to Edge: Why Are So Many Developers Choosing to Run LLMs on the Arm Architecture?

As large language model (LLM) technology evolves, developers no longer have to rely solely on the cloud, and more and more of them are deploying LLM inference on high-performance Arm architectures. Behind this shift are advances in inference framework optimization, model compression, and quantization.

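In this episode, a Principal Solutions Architect from Arm gives an accessible introduction to what an LLM is and to the cloud platforms that bring LLM applications to the Arm Neoverse architecture, including AWS Graviton, Google Cloud, and Microsoft Azure. The episode also covers the key open-source communities driving the LLM ecosystem, such as Hugging Face and ModelScope, and the speaker shares some interesting use cases. The advantages of the Arm architecture include high energy efficiency, low total cost of ownership, cross-platform consistency, and local computing that improves data privacy. LLM on Arm is no longer a distant idea; it is already happening, and Arm is the ideal choice for your cloud and edge deployments!

In addition, Arm will host a series of events during COMPUTEX 2025, including the Arm Forward-Looking Technology Summit Keynote and the Arm Developer Experience developer conference.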

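1. Arm Forward-Looking Technology Summit Keynote (venue: 3rd floor, Grand Hilai Taipei (台北漢來大飯店)):

On May 19, 2025, from 3 to 4 p.m., Chris Bergey, Senior Vice President and General Manager of Arm's Client Line of Business, will speak in person on "From Cloud to Edge: Building AI Together on the Arm Architecture," sharing the latest trends and innovations across silicon technology, software development, and cloud and edge platforms. Seating is limited, and early arrivals have a chance to receive a limited-edition co-branded gift from Arm and the Aston Martin Aramco F1 team. Register now!

Registration for the Arm Forward-Looking Technology Summit Keynote: https://reurl.cc/2KrR2X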

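2. Arm Developer Experience developer event series (venue: 6th floor, Grand Hilai Taipei (台北漢來大飯店); 5/20 Arm Cloud AI Day 13:00-17:00, 5/21 Arm Mobile AI Day 9:00-13:00):

For the first time, Arm will host a three-day series of events for developers during COMPUTEX, May 20-22, 2025, consisting of the following four activities:

A. 5/20, 13:00-17:00: technical talks and workshops on Cloud AI:

Selected sessions include: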

Accelerating development with Arm GitHub Copilot

Seamless cloud migration to Arm

Deploying a RAG-based chatbot with llama-cpp-python using Arm KleidiAI on Google Axion processors, plus live Q&A

Ubuntu: Unlocking the Power of Edge AI

B. 5/21, 9:00-13:00: technical talks on Mobile AI:

Selected sessions include:

Build next-generation mobile gaming with Arm Accuracy Super Resolution (ASR)

Vision LLM inference on Android with KleidiAI and MNN

Introduction to Arm Frame Advisor/Arm Performance Studio

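C. Arm developer meetup with drinks and light refreshments (5/20, 17:30-19:30)

We are hosting a developer meetup on 5/20! You are warmly invited to meet Arm developer experts in person.

D. Arm Developer Chill Out Lounge (5/20-5/22, 9:00-17:00):

For all three days, we have set up a comfortable lounge where you can talk with Arm developer experts in a relaxed setting, watch Arm product demos, charge your devices, play board games, and take a break.

No registration is required; drop by at any time.

Seats are limited, so register for the events above now. Registrants also have a chance to win prizes in an on-site draw, including a Keychron K5 Max ultra-slim wireless custom mechanical keyboard and AirPods 4.

Let Arm help you scale cloud applications, boost mobile device performance, optimize games, and more, as you build the next generation of AI solutions!

Registration for the Arm Developer Experience event series: https://reurl.cc/bWNo56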

--

Hosting provided by SoundOn

2025-04-23 | 31 min

Kabir's Tech Dives

The Next Token and Beyond: Unraveling the LLM Enigma

Yes, I can certainly provide a long and detailed elaboration on the topics covered in the sources, particularly focusing on LLM-generated text detection and the nature of LLMs themselves.

The emergence of powerful Large Language Models (LLMs) has led to a significant increase in text generation capabilities, making it challenging to distinguish between human-written and machine-generated content. This has consequently created a pressing need for effective LLM-generated text detection. The necessity for this detection arises from several critical concerns, as outlined in the survey. These include the potential for misuse of LLMs in spreading disinformation...

2025-04-16 | 19 min

LessWrong (Curated & Popular)

“Reducing LLM deception at scale with self-other overlap fine-tuning” by Marc Carauleanu, Diogo de Lucena, Gunnar_Zarncke, Judd Rosenblatt, Mike Vaiana, Cameron Berg

This research was conducted at AE Studio and supported by the AI Safety Grants programme administered by Foresight Institute with additional support from AE Studio.

Summary

In this post, we summarise the main experimental results from our new paper, "Towards Safe and Honest AI Agents with Neural Self-Other Overlap", which we presented orally at the Safe Generative AI Workshop at NeurIPS 2024. This is a follow-up to our post Self-Other Overlap: A Neglected Approach to AI Alignment, which introduced the method last July.

Our results show that the Self-Other Overlap (SOO) fine-tuning drastically[1] reduces deceptive...

2025-03-17 | 12 min

AI Portfolio Podcast

Maxime Labonne: LLM Scientist Roadmap, AI Scientist, LLM Course & Open Source - AI Portfolio Podcast

Maxime Labonne, Co-author of the LLM Engineers Handbook, creator of the LLM course on github with over 40k stars, and author of Hands on Graph Neural Networks.

Follow/Connect:

Maxime LinkedIn: https://www.linkedin.com/in/maxime-labonne/

Mark Linkedin: https://www.linkedin.com/in/markmoyou/

Chapters:

📌 00:00 – Intro

📚 01:51 – Maxime: Books & Courses

🤖 07:30 – AI Scientist vs. AI Engineer

🚀 09:05 – Path to Becoming an AI Expert

🎓 11:13 – Do You Need a Degree?

⏳ 13:01 – How Long Does It Take to Become an AI Scientist?

👨‍🔬 15:58 – Individual Contributor Role as an LLM Scientist

🧠 26:04 – Understanding LLM Personality

🎯 30:07 – Objective Func...

2025-03-12 | 1h 27

Daily Paper Cast

Autellix: An Efficient Serving Engine for LLM Agents as General Programs

🤗 Upvotes: 15 | cs.LG, cs.AI, cs.DC

Authors:

Michael Luo, Xiaoxiang Shi, Colin Cai, Tianjun Zhang, Justin Wong, Yichuan Wang, Chi Wang, Yanping Huang, Zhifeng Chen, Joseph E. Gonzalez, Ion Stoica

Title:

Autellix: An Efficient Serving Engine for LLM Agents as General Programs

Arxiv:

http://arxiv.org/abs/2502.13965v1

Abstract:

Large language model (LLM) applications are evolving beyond simple chatbots into dynamic, general-purpose agentic programs, which scale LLM calls and output tokens to help AI agents reason, explore, and solve complex tasks. However, existing LLM serving systems ign...

2025-02-21 | 22 min

ibl.ai

OWASP: LLM Applications Cybersecurity and Governance Checklist

Summary of https://genai.owasp.org/resource/llm-applications-cybersecurity-and-governance-checklist-english

Provides guidance on securing and governing Large Language Models (LLMs) in various organizational contexts. It emphasizes understanding AI risks, establishing comprehensive policies, and incorporating security measures into existing practices.

The document aims to assist leaders across multiple sectors in navigating the challenges and opportunities presented by LLMs while safeguarding against potential threats. The checklist helps organizations formulate strategies, improve accuracy, and reduce oversights in their AI adoption journey.

It also includes references to external resources like OWASP and MITRE to facilitate a robust cybersecurity plan...

2025-02-18 | 20 min

Daily Paper Cast

Can 1B LLM Surpass 405B LLM? Rethinking Compute-Optimal Test-Time Scaling

🤗 Upvotes: 71 | cs.CL

Authors:

Runze Liu, Junqi Gao, Jian Zhao, Kaiyan Zhang, Xiu Li, Biqing Qi, Wanli Ouyang, Bowen Zhou

Title:

Can 1B LLM Surpass 405B LLM? Rethinking Compute-Optimal Test-Time Scaling

Arxiv:

http://arxiv.org/abs/2502.06703v1

Abstract:

Test-Time Scaling (TTS) is an important method for improving the performance of Large Language Models (LLMs) by using additional computation during the inference phase. However, current studies do not systematically analyze how policy models, Process Reward Models (PRMs), and problem difficulty influence TTS. This lack of analysis limits the und...

2025-02-12 | 22 min

Bliskie Spotkania z AI

#11 GenAI and LLMs - Everything You Need to Know Before You Get Started | Mariusz Korzekwa

Not learning AI? Don't worry, AI is already learning how to replace you!

🔔 Subscribe so you don't miss new episodes!

This time my guest is Mariusz Korzekwa, an #AI expert specializing in #promptEngineering and #LLM integrations.

In this episode we talk about language models (LLMs) and their applications in artificial intelligence. We cover generative AI, discussing its definition and the key differences between LLMs and classical AI. We examine the importance of multimodality, as well as the role of input and output in the context of the operation of...

2025-02-11 | 2h 40

Daily Paper Cast

Preference Leakage: A Contamination Problem in LLM-as-a-judge

🤗 Upvotes: 25 | cs.LG, cs.AI, cs.CL

Authors:

Dawei Li, Renliang Sun, Yue Huang, Ming Zhong, Bohan Jiang, Jiawei Han, Xiangliang Zhang, Wei Wang, Huan Liu

Title:

Preference Leakage: A Contamination Problem in LLM-as-a-judge

Arxiv:

http://arxiv.org/abs/2502.01534v1

Abstract:

Large Language Models (LLMs) as judges and LLM-based data synthesis have emerged as two fundamental LLM-driven data annotation methods in model development. While their combination significantly enhances the efficiency of model training and evaluation, little attention has been given to the potential contamination brought by this new...

2025-02-05 | 21 min

AI Engineering Podcast

Optimize Your AI Applications Automatically With The TensorZero LLM Gateway

Summary

In this episode of the AI Engineering podcast Viraj Mehta, CTO and co-founder of TensorZero, talks about the use of LLM gateways for managing interactions between client-side applications and various AI models. He highlights the benefits of using such a gateway, including standardized communication, credential management, and potential features like request-response caching and audit logging. The conversation also explores TensorZero's architecture and functionality in optimizing AI applications by managing structured data inputs and outputs, as well as the challenges and opportunities in automating prompt generation and maintaining interaction history for optimization purposes.

Announcements

Hello...

2025-01-22 | 1h 03
