Shows

80k After Hours
Robert Wright & Rob Wiblin on the truth about effective altruism
This is a cross-post of an interview Rob Wiblin did on Robert Wright's Nonzero podcast in January 2024. You can get access to full episodes of that show by subscribing to the Nonzero Newsletter. They talk about Sam Bankman-Fried, virtue ethics, the growing influence of longtermism, what role EA played in the OpenAI board drama, the culture of local effective altruism groups, where Rob thinks people get EA most seriously wrong, what Rob fears most about rogue AI, the double-edged sword of AI-empowered governments, and flattening the curve of AI's social disruption. And if you enjoy...
2024-04-04 2h 08

80k After Hours
Highlights: #146 – Robert Long on why large language models like GPT (probably) aren’t conscious
This is a selection of highlights from episode #146 of The 80,000 Hours Podcast. These aren't necessarily the most important, or even most entertaining parts of the interview, and if you enjoy this, we strongly recommend checking out the full episode: Robert Long on why large language models like GPT (probably) aren’t conscious. And if you're finding these highlights episodes valuable, please let us know by emailing podcast@80000hours.org. Highlights put together by Simon Monsour, Milo McGuire, and Dominic Armstrong
2024-01-25 20 min

"The Cognitive Revolution" | AI Builders, Researchers, and Live Player Analysis
Nathan on The 80,000 Hours Podcast: AI Scouting, OpenAI's Safety Record, and Redteaming Frontier Models
In today's conversation, Nathan joins Rob Wiblin, host of The 80,000 Hours Podcast, to discuss why we need more AI scouts, OpenAI's safety record, and redteaming frontier models. If you need an ecommerce platform, check out our sponsor Shopify: https://shopify.com/cognitive for a $1/month trial period. We're hiring across the board at Turpentine and for Erik's personal team on other projects he's incubating. He's hiring a Chief of Staff, EA, Head of Special Projects, Investment Associate, and more. For a list of JDs, check out: eriktorenberg.com. SPONSORS:
2023-12-27 3h 53

80,000 Hours Podcast
#175 – Lucia Coulter on preventing lead poisoning for $1.66 per child
Lead is one of the most poisonous things going. A single sugar sachet of lead, spread over a park the size of an American football field, is enough to give a child that regularly plays there lead poisoning. For life they’ll be condemned to a ~3-point-lower IQ; a 50% higher risk of heart attacks; and elevated risk of kidney disease, anaemia, and ADHD, among other effects. We’ve known lead is a health nightmare for at least 50 years, and that got lead out of car fuel everywhere. So is the situation under control? Not even close. Ar...
2023-12-14 2h 14

80k After Hours
Luisa and Robert Long on how to make independent research more fun
In this episode of 80k After Hours, Luisa Rodriguez and Robert Long have an honest conversation about the challenges of independent research. Links to learn more, highlights and full transcript. They cover: Assigning probabilities when you’re really uncertain; Struggles around self-belief and imposter syndrome; The importance of sharing work even when it feels terrible; Balancing impact and fun in a job; And some mistakes researchers often make. Who this episode is for: People pursuing independent research; People who struggle with self-belief; People who feel a pull towards pursuing a career they don’t actually want; W...
2023-03-14 43 min

The Bayesian Conspiracy
175 – FTX + EA, and Personal Finance
David from The Mind Killer joins us. We got to talking about the FTX collapse and some of the waves it sent through the Effective Altruism community. Afterwards David helps us out with personal finance.

0:00:00 Intro

0:02:08 The Sequences Now Formatted For Zoomers

0:11:25 FTX Collapse & EA Shockwaves

0:54:20 Personal Finance

1:43:20 Thank the Patron!

The Sequences In A Zoomer-Readable Format

FTX: The $32B implosion – a good fast roundup of what the hell happened

Yudkowsky’s essay on FTX Future Fund money that’s been paid out

Yudkowsky’s essay war...
2022-11-16 1h 45

The Bayesian Conspiracy
BREAKING – FTX collapse & EA shockwaves
While recording the next episode we got to talking about the FTX collapse and some of the waves it sent through the Effective Altruism community. We decided to break it out into a separate segment so it can air while it’s still relevant.

FTX: The $32B implosion – a good fast roundup of what the hell happened

Yudkowsky’s essay on FTX Future Fund money that’s been paid out

Yudkowsky’s essay warning against humans trying to use utilitarianism 14 years ago

Bunch of Twitter – The reason we have excess money to give; Rob...
2022-11-13 43 min

The Bayesian Conspiracy
169 – S&E BS re AGI
Steven and Eneasz discuss the latest timeline shifts regarding the advent of superhuman general intelligence. Then in the LW posts we got greatly sidetracked by politics (stupid mind-killer!). Links: Two-year update on my personal AI timelines on LessWrong Robert Wiblin …
2022-08-24 2h 12

The Bayesian Conspiracy
169 – S&E BS re AGI
Steven and Eneasz discuss the latest timeline shifts regarding the advent of superhuman general intelligence. Then in the LW posts we got greatly sidetracked by politics (stupid mind-killer!).

Links:

Two-year update on my personal AI timelines on LessWrong

Robert Wiblin on kissing your kids

Lex Fridman podcast

Jack Clark (AI policy guy) on AI policy

Scott Alexander on slowing AI progress

Guide to working in AI policy and strategy

0:00:42 Feedback

0:08:20 Main Topic

1:13:29 LW posts

2:11:40 Thank the Patron

Hey...
2022-08-24 2h 12


80k After Hours
Clay Graubard and Robert de Neufville on forecasting the war in Ukraine
In this episode of 80k After Hours, Rob Wiblin interviews Clay Graubard and Robert de Neufville about forecasting the war between Russia and Ukraine. Links to learn more, highlights and full transcript. They cover: Their early predictions for the war; The performance of the Russian military; The risk of use of nuclear weapons; The most interesting remaining topics on Russia and Ukraine; General lessons we can take from the war; The evolution of the forecasting space; What Robert and Clay were reading back in February; Forecasters vs. subject matter experts; Ways to get involved with the forecasting community; Impressive past predictions; And more
2022-05-26 1h 59

The Bayesian Conspiracy
157 – A Fistful of Topics
We catch up on stuff and hit a few wandering targets.

0:01 Jace on Lithium

21:10 Feedback

26:05 LW posts

50:06 Nuclear Ukraine

59:43 Nuclear Us

1:05:30 Steven Tries To Get Us Cancelled

Lithium in drinking water linked with lower suicide rates

The Mind Killer – Vladimir Putin’s Small Dick Energy

Warcasting – ACX and Zvi

Razom Ukraine delivers medical supplies

Robert Wiblin leaving London

Potassium Iodide tablets

Eneasz Interviews Ada Palmer

Keep Your Identity Small

Hey look, we have a discord...
2022-03-09 1h 22

80,000 Hours Podcast
#101 – Robert Wright on using cognitive empathy to save the world
In 2003, Saddam Hussein refused to let Iraqi weapons scientists leave the country to be interrogated. Given the overwhelming domestic support for an invasion at the time, most key figures in the U.S. took that as confirmation that he had something to hide: probably an active WMD program.

But what about alternative explanations? Maybe those scientists knew about past crimes. Or maybe they’d defect. Or maybe giving in to that kind of demand would have humiliated Hussein in the eyes of enemies like Iran and Saudi Arabia.

According to today’s guest Robert Wright, host o...
2021-05-28 1h 36

The Wright Show
Saving the World One Podcast at a Time (Robert Wright & Rob Wiblin)
Defining effective altruism ... Rob explains the meaning of ‘80,000 Hours’ ... Should young people dedicate their careers to fighting climate change? ... The Apocalypse Aversion Project ... How cognitive biases fuel tribalism ... Bob: We need a psychological revolution ... Are external threats the best way to bring people together? ... The balance between surveillance and public safety ... Bob: Let's retire the word 'apologist' ... Making rationality cool ... Rob challenges Bob to find flaws in "The Moral Animal" ...
2021-05-25 00 min

MeaningofLife.tv
Saving the World One Podcast at a Time (Robert Wright & Rob Wiblin)
Defining effective altruism ... Rob explains the meaning of ‘80,000 Hours’ ... Should young people dedicate their careers to fighting climate change? ... The Apocalypse Aversion Project ... How cognitive biases fuel tribalism ... Bob: We need a psychological revolution ... Are external threats the best way to bring people together? ... The balance between surveillance and public safety ... Bob: Let's retire the word 'apologist' ... Making rationality cool ... Rob challenges Bob to find flaws in "The Moral Animal" ...
2021-05-25 00 min


Spedup Conversation With Tyler
Rob Wiblin interviews Tyler on *Stubborn Attachments*
In this special episode, Rob Wiblin of 80,000 Hours has the super-sized conversation he wants to have with Tyler about Stubborn Attachments. In addition to a deep examination of the ideas in the book, the conversation ranges far and wide across Tyler's thinking, including why we won't leave the galaxy, the unresolvable clash between the claims of culture and nature, and what Tyrone would have to say about the book, and more.
Transcript and links: https://medium.com/conversations-with-tyler/tyler-cowen-robert-wiblin-stubborn-attachments-80000-hours-podcast-359aa62aa8ab ...
2020-10-22 2h 16

80,000 Hours Podcast
Rob & Howie on what we do and don't know about 2019-nCoV
Two 80,000 Hours researchers, Robert Wiblin and Howie Lempel, record an experimental bonus episode about the new 2019-nCoV virus. See this list of resources, including many discussed in the episode, to learn more. In the 1h15m conversation we cover: • What is it? • How many people have it? • How contagious is it? • What fraction of people who contract it die? • How likely is it to spread out of control? • What's the range of plausible fatalities worldwide? • How does it compare to other epidemics? • What don't we know and why? • What a...
2020-02-03 1h 18

Google DeepMind: The Podcast
Towards the future
AI researchers around the world are trying to create a general purpose learning system that can learn to solve a broad range of problems without being taught how. Koray Kavukcuoglu, DeepMind’s Director of Research, describes the journey to get there, and takes Hannah on a whistle-stop tour of DeepMind’s HQ and its research. If you have a question or feedback on the series, message us on Twitter (@DeepMind using the hashtag #DMpodcast) or email us at podcast@deepmind.com. Further reading: OpenAI: An overview of neural networks and the progress that has been...
2019-09-10 26 min

The New Liberal Podcast
Good at Doing Good: Effective Altruism ft. Robert Wiblin
Robert Wiblin from 80000hours.org joins the podcast to discuss Effective Altruism. Robert and Jeremiah discuss whether we should focus on the long term or the short term with our work, and which areas of politics are the highest leverage areas for helping people. If you enjoy the podcast, please consider supporting us at Patreon.com/neoliberalproject. Patrons get access to exclusive bonus episodes, newsletters, and our sticker-of-the-month club and community Slack.
2019-06-21 59 min

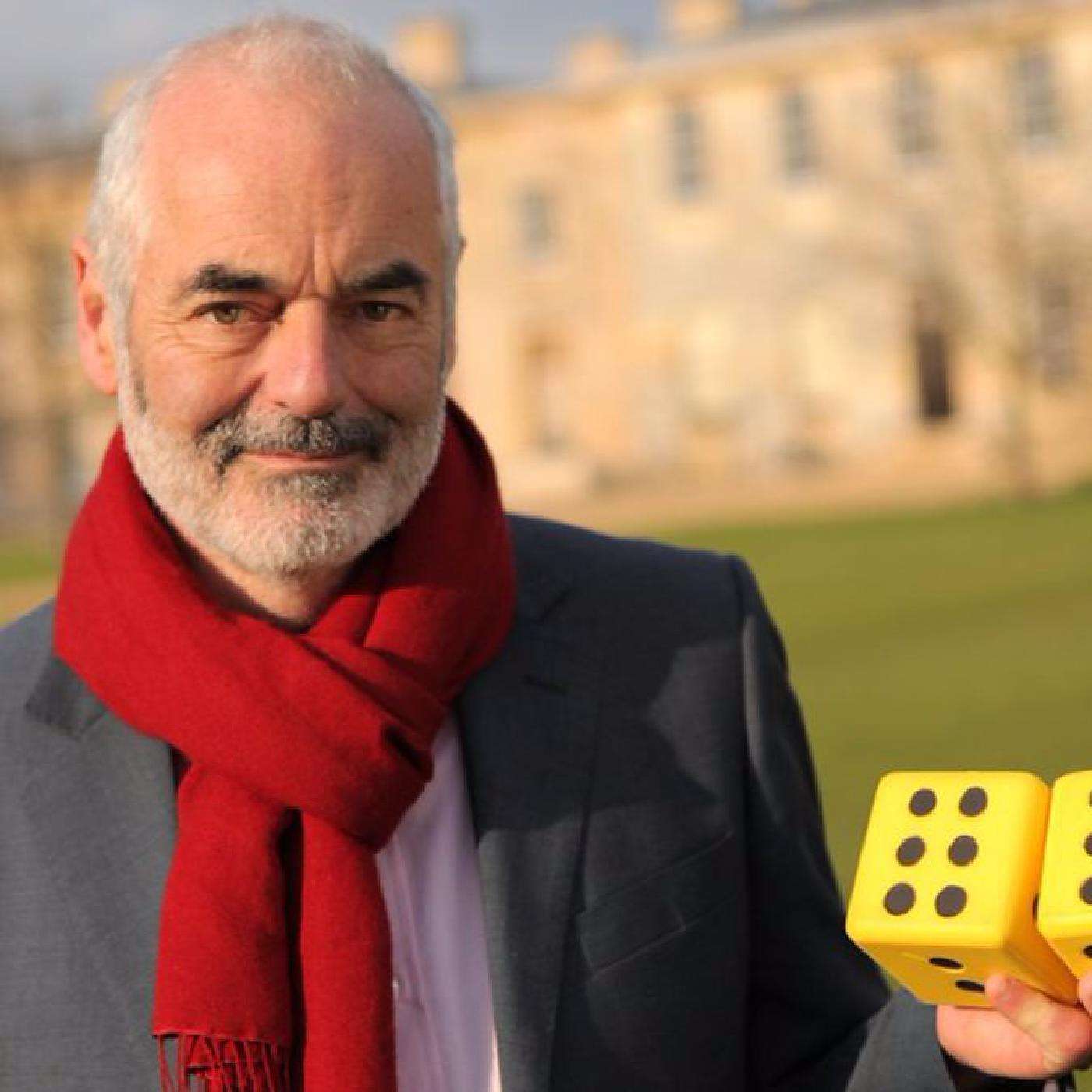

Farrel Buchinsky's Listen Later
#2 - David Spiegelhalter on risk, stats and improving understanding of science
Podcast: 80,000 Hours Podcast (LS 54 · TOP 0.5%)
Episode: #2 - David Spiegelhalter on risk, stats and improving understanding of science
Pub date: 2017-06-21
Recorded in 2015 by Robert Wiblin with colleague Jess Whittlestone at the Centre for Effective Altruism, and recovered from the dusty 80,000 Hours archives. David Spiegelhalter is a statistician at the University of Cambridge and something of an academic celebrity in the UK. Part of his role is to improve the public understanding of ri...
2019-05-09 33 min

Philanthropy Podcast: A Resource for Nonprofit Leaders and Fundraising & Advancement Professionals
Effective Altruism - An Interview with Eric Freidman, Author of Reinventing Philanthropy - Episode 36
The challenge we face: Donors are becoming more sophisticated when it comes to charitable giving. One philosophy and social movement around philanthropy is effective altruism. Through effective altruism donors try to determine and support nonprofits that combine the most effective and cost efficient programming to impact our world. Fundraising professionals and nonprofit leaders need to be aware of this growing attitude toward funding nonprofits and ensure that our interactions with donors communicate clearly the value proposition of our organization. In this episode we’ll discuss: Effective altruism’s approach to thinking about charitable giving and philanthropy; How the approach fundraisers take...
2018-07-30 45 min

Philanthropy Podcast: A Resource for Nonprofit Leaders and Fundraising & Advancement Professionals
Effective Altruism - An Interview with Eric Freidman, Author of Reinventing Philanthropy
The challenge we face: Donors are becoming more sophisticated when it comes to charitable giving. One philosophy and social movement around philanthropy is effective altruism. Through effective altruism donors try to determine and support nonprofits that combine the most effective and cost efficient programming to impact our world. Fundraising professionals and nonprofit leaders need to be aware of this growing attitude toward funding nonprofits and ensure that our interactions with donors communicate clearly the value proposition of our organization. In this episode we’ll discuss: Effective altruism’s approach to thinking about charitable giving and...
2018-07-30 00 min

80,000 Hours Podcast
#2 - David Spiegelhalter on risk, stats and improving understanding of science
Recorded in 2015 by Robert Wiblin with colleague Jess Whittlestone at the Centre for Effective Altruism, and recovered from the dusty 80,000 Hours archives. David Spiegelhalter is a statistician at the University of Cambridge and something of an academic celebrity in the UK. Part of his role is to improve the public understanding of risk - especially everyday risks we face like getting cancer or dying in a car crash. As a result he’s regularly in the media explaining numbers in the news, trying to assist both ordinary people and politicians focus on the important risks we f...
2017-06-21 33 min

80,000 Hours Podcast
#1 - Miles Brundage on the world's desperate need for AI strategists and policy experts
Robert Wiblin, Director of Research at 80,000 Hours, speaks with Miles Brundage, research fellow at the University of Oxford's Future of Humanity Institute. Miles studies the social implications surrounding the development of new technologies and has a particular interest in artificial general intelligence, that is, an AI system that could do most or all of the tasks humans could do.

This interview complements our profile of the importance of positively shaping artificial intelligence and our guide to careers in AI policy and strategy

Full transcript, apply for personalised coaching to work on AI strategy, see what questions are...
2017-06-06 55 min
